Why I remain a GenAI sceptic

Wherein I "express frustration with the pervasive and often unnecessary integration of generative AI into various aspects of life" (as summarised by Apple) and share some of the worst uses of AI that I've come across.

I've had these thoughts going around in my head for a good while now, and I wanted to write down how I feel about this. I don't expect this post to change anyone's opinions or actions, but... venting is useful sometimes, you know?

It's been almost three years since ChatGPT launched, and man, am I sick of generative AI. The discourse is tiresome (and don't worry, I do recognise the irony of me saying that in a blog post contributing to said discourse). The tech is pretty damn impressive, but I feel like for every positive thing that has come out of it, there are at least a few hundred negative things.

And that's the kicker -- I can't say that it's all bad. As much as we shitpost about "prompt engineers", I have seen people get genuinely useful results out of LLM tools for software engineering work, and I could probably do the same if I cared about it.

But I just can't bring myself to care. Between the tech industry trying to shove AI features into our faces at every possible moment, and the rest of the world filling up with machine-generated dreck... Any interest I had in this tech, or goodwill towards it, has long since evaporated.

And that's without even considering the negative factors that people usually talk about, like the energy consumption of training and inference, the questionable provenance of the training data, the impact of scrapers on the internet, or the devaluation of art.

I just want off Sam Altman's wild ride.

Please Get AI Features Out Of My Face

Everything in the tech industry has to involve AI now. Normally, before you invest in R&D for a thing, you might ask some questions, like "does this make sense?" or "will this deliver value to our users?" or "will we see a return on this?" -- but when AI comes into the picture, all bets are off. It must be done.

If you can't think of anything specific to your product, then just add a summarise button. People love summaries, it seems.

Uber Eats now has a 'helpful' feature where if a restaurant hasn't provided a description for an item, they'll sometimes just feed the item name into an LLM and ask it to generate one. As a picky eater who relies on these, this is the stuff of nightmares for me.

Back in February, I was at the huge National Exhibition Centre in England. It was the week just before the Crufts dog show, so there were a lot of bizarre dog-related ads around the place.

Two of them stood out as the funniest, and I really can't decide which one is better.

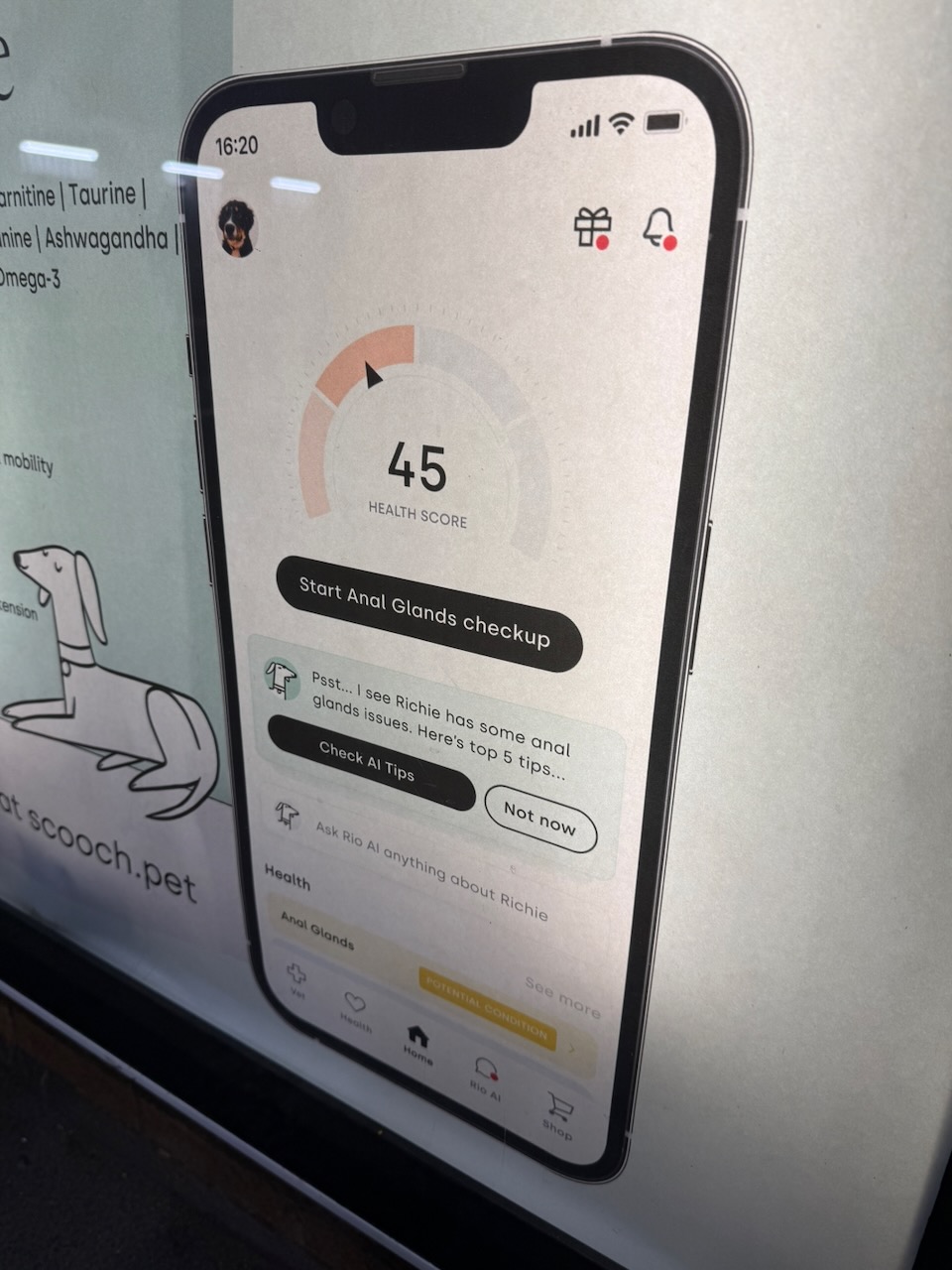

There was this poster for dog food that inexplicably has an app. With AI.

Why, yes, I do want to "Check AI Tips" for the anal glands issues that my dog food's app has somehow detected. This is a normal thing that normal people do.

Everything in this screenshot is so ludicrous that I suspect that the person tasked with designing this was fully aware of how this is bullshit, and just decided to have some fun.

As for the other one... Well, it's not AI-related, but it's still a very strong contender.

All over the NEC was this ad about canine castration with the tag line "There's more than one way to crack a nut", accompanied by a nutcracker and a walnut. The budget was so big that they even had a specially formatted version with slow-motion video just for the circular LED display in the car park.

But yeah, I got a little sidetracked. This AI brain rot just pervades everything. Applications and software libraries that I've used for years are suddenly "AI-powered" and "designed for AI".

Sometimes I have to write web applications with Spring, an Enterprise Java™ web framework that I loathe because it is mind-numbingly complex. On a lark, I went to their homepage to see if they had succumbed. Sure enough: the very first thing on there is Spring AI.

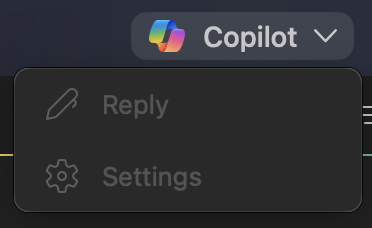

At work, we use Outlook for email. The most recent Outlook for Mac update added a Copilot button to the toolbar which you cannot hide. The functionality is disabled at an organisational level, so clicking on it just shows you two greyed-out options, but the button is still there, reminding you that AI is here.

All of the useful options on the toolbar have indistinguishable monochrome icons (since that's trendy at the moment), but this completely useless button gets a full-colour icon.

I also use IntelliJ IDEA for writing code. Last year, they added AI-based single-line code completion which runs on your device, and I decided to try it out. Sometimes it gave me the exact thing I was going to write, and sometimes it was really wrong in ways that just made me laugh, so I didn't bother turning it off.

As of a recent update, they added the ability to complete multiple lines (which I've turned off), and also seemingly removed the bit that verifies the suggestions against the IDE's code model. So sometimes it'll suggest I call a method, I'll press Tab with trepidation, and then my brand new code will turn red because that method doesn't actually exist. Great fucking job. A+.

I think I first used an Internet search engine in 1999 or maybe 2000, and the concept has always been pretty clear. You type in a query. You get back a list of results, where each one shows you: a) the title, b) a snippet from the page that shows how it relates to your query, and c) the URL.

DuckDuckGo has been my go-to search engine for over a decade, but now, many of the results have AI-generated nonsense in lieu of the usual snippet. I don't know if this is the fault of DDG or the underlying APIs they use (like Bing); probably the latter.

The reason this stands out to me is that on multiple occasions, the AI-generated blurb will promise that the page has the thing I want on it, and then I click the link and realise that it was a lie.

... Who does this help, exactly?

Out of all the software and tech products I use regularly, I have come across precisely one "AI" feature that I can say actually makes my life better.

I tried to put my scepticism aside and give "Apple Intelligence" (ick) a fair shot, since I find them marginally more trustworthy than a lot of the other tech firms when it comes to privacy.

There is some dark magic that their Photos app is now doing which allows you to type free text descriptions into the search box. It's not perfect by any means, but it is useful and scarily effective. Ironically, although they announced it in the original Apple Intelligence keynote, it's one of the few things that they haven't really continued harping on about.

The rest...? Well, being able to turn my phone into a heater by generating incredibly unhinged emoji in the Apple house style is certainly a fun party trick, but I can't say it's useful.

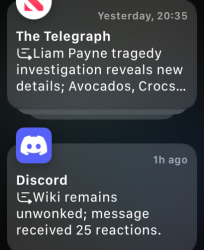

And I have notification summaries on because they're often incredibly funny, but I don't think that's the use case they were going for. Much of the time, it just mangles the notification contents.

Ivory (the Mastodon/Fediverse client I use) and Bluesky will both send me notifications whenever someone interacts with one of my posts, which include the post text. The summaries will often end up mashing up the other user's name with the contents of the post.

I found one incredible example in my screenshots; I posted this on Bluesky last year:

*cutely violates the GDPR*

*avoids fines by making puppy dog eyes at the Information Commissioner*

Someone liked it, and someone else followed me around the same time. iOS summarised these two into: "Bluesky: Pup Nyx violated GDPR; Stevie has followed you."

But hey, if I'm tired of GenAI tech... I can still refuse to use it and try my best to not think about it. Right?

Please Get AI Imagery Out Of My Face

Last October, I went to Dublin Zoo, where they were preparing for their night-time event, Wild Lights: 'A Journey Through Time'.

Visitors will be transported across time as they discover the history of our planet through the ages, there’s everything from pre-historic megafauna to life-changing first inventions and even beautiful works of art from the Renaissance, all in the form of bright and colourful, eye-catching lanterns!

This was two weeks before the start, and they were setting up the props around the park. The lanterns were kinda cool, even if they looked rather unsettling in the daytime.

Unfortunately, they'd also decided to 'enhance' the theming using some of the most brazen AI slop I've seen yet.

What on earth is going on here? Someone gave enough of a shit to manually add the text, but either didn't notice or didn't care that the plane is totally broken. The propeller is my favourite part. It's so bad.

This one is particularly bizarre. The old-timey poster at the top is obviously a computer-generated pastiche of Coca-Cola advertising. On most of the AI imagery, they did replace the text -- but they didn't bother doing that with the bit on the left that looks like it just says "Cum" in a cursive font, 6 times in a row.

Then there's the cube in front of the world's biggest mailbox, with automotive-themed posters on it. Those are not actually GenAI output -- I have no idea where they originated, but there are various shops online selling those.

And speaking of shops... Anywhere in Scotland that gets even the faintest whiff of tourism will have souvenir shops. The Royal Mile in Edinburgh is the worst for this by far, but there are quite a few of them in Glasgow too, often combined with other generic fare and whatever's trendy at the moment, like fidget spinners and disposable vapes and now Labubus.

Do you want to buy a gaudy T-shirt, a bong, a tartan plushie and a, uh... *checks scribbled writing on paw* "Big Into Energy" all in the same transaction? They've got you covered.

They've now, of course, begun selling AI slop too. Do you want to buy a framed picture of an anthropomorphic cow that's part of some alternate-universe version of Celtic Football Club that's sponsored by "Defbef" instead of "Dafabet"? Of course you do, ya wee daftie. Get your otter-themed £10 out.

Please Get AI Text Out Of My Face

It's not just imagery though, I'm also really sick of AI-generated text. There's this specific, cloying tone that feels like the written equivalent of nails on a chalkboard. Things get split up into essay-style formats when they have no business being essays. Overly verbose bulleted lists, often with an emoji icon attached to the start of each point. Everything gets a Conclusion.

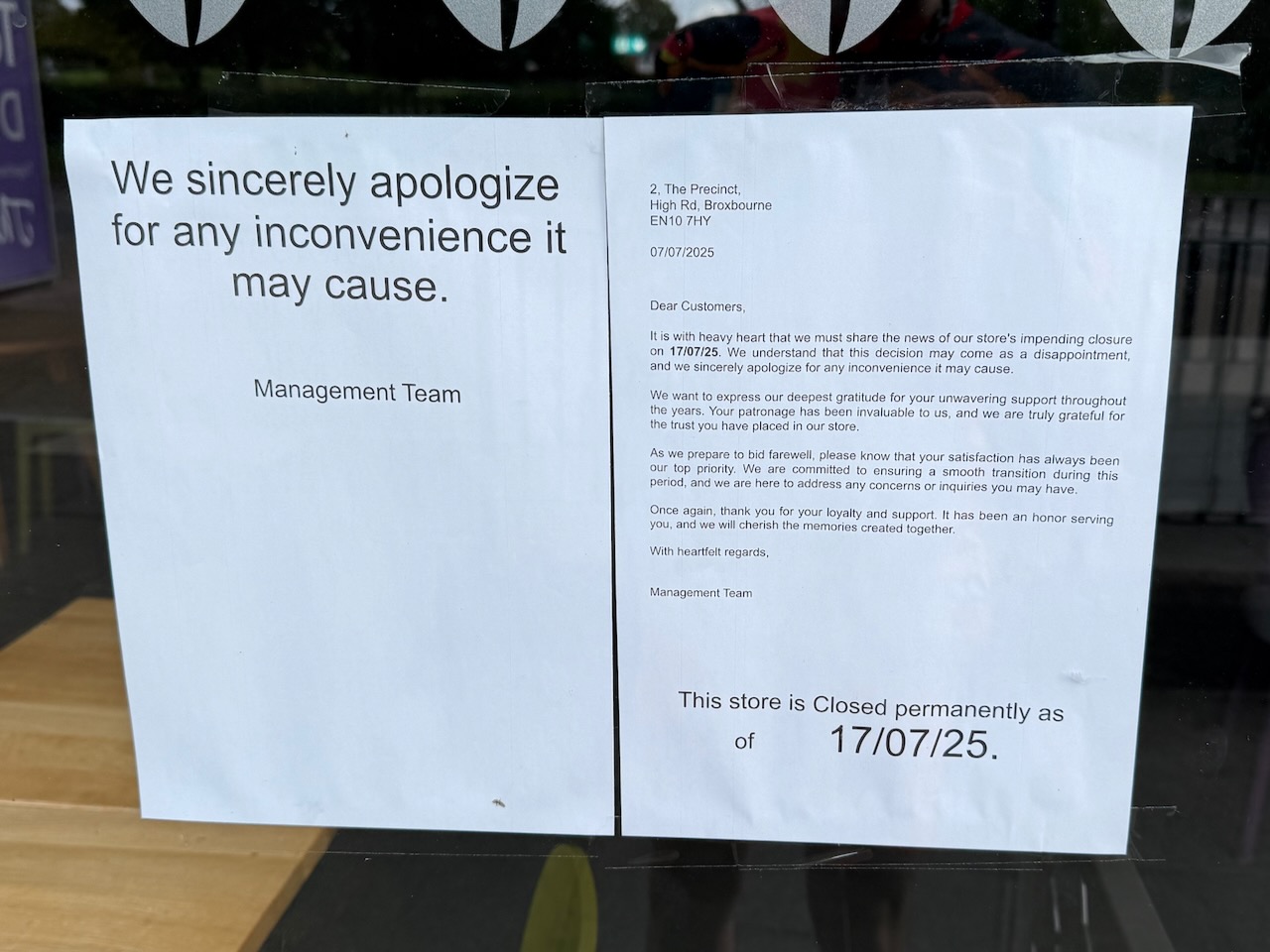

A Costa Coffee near my friend's house recently closed down, and they used two sheets of A4 paper to print out a formal letter, complete with the shop's address, but without actually mentioning the name of the shop anywhere.

Dear Customers,

It is with heavy heart that we must share the news of our store's impending closure on 17/07/25. We understand that this decision may come as a disappointment, and we sincerely apologize for any inconvenience it may cause.

We want to express our deepest gratitude for your unwavering support throughout the years. Your patronage has been invaluable to us, and we are truly grateful for the trust you have placed in our store.

As we prepare to bid farewell, please know that your satisfaction has always been our top priority. We are committed to ensuring a smooth transition during this period, and we are here to address any concerns or inquiries you may have.

Once again, thank you for your loyalty and support. It has been an honor serving you, and we will cherish the memories created together.

With heartfelt regards,

Management Team

They could have just written the ChatGPT prompt on a sticky note and saved everybody the hassle.

I've been trying to think of a good way to explain why this bugs me so much. Why do I feel so offended whenever I see a farewell letter or an announcement or a meme generated by an AI model?

And I think it's because of the lack of intentionality. If I spend 10 minutes in an image editor to make a shitpost by mashing up some stock photos, or do a goofy little MSPaint drawing, then the result may be silly and crude, but everything in it was something I added on purpose.

Last year I made this absurd ADHD meme formatted like a fake advertisement: Are you too well-organised? Do you need mystery in your life? I will misplace your stuff. Guaranteed.

Now, I could have gone and asked ChatGPT "here's a picture of my fursona, make an advert for a service where he'll lose your things because he has ADHD", and it'd probably give me something passable. I assume the training data includes a lot of marketing material, so it might come up with a way to riff on ad tropes that I hadn't thought about. I can't draw, but a GenAI model could even come up with an image of me misplacing an item, and if I'm lucky, all the anatomy would be correct.

But once again, there's no intentionality in that, and I wouldn't feel comfortable saying that I made it. A big part of the reason that this post is funny is that you can see just how much work I put into this joke!

I've had some fun getting Apple's Genmoji to generate ridiculous emoji and seeing what I can get the model to spit out, but it still doesn't feel like something I made. I pulled the lever on the slot machine until I got an output that I liked. That's not my idea of creativity.

Likewise, back to text... Did the management team of the defunct Costa Coffee in Broxbourne really have my satisfaction as their top priority, and find my patronage invaluable? I highly doubt it, I think that's just what the model said was statistically likely to appear in a generic farewell letter. ¯\_(ツ)_/¯

(And what the flying fuck are they ensuring a smooth transition to? The coffee machine in the Sainsbury's Local next door? Did they even read the letter before they printed it out? Probably not.)

And yeah. It's hard to explain, but there's this 'vibe' to AI-generated text that I can spot from a mile away now. It's all overly prosaic and feels like it's come out of a corporate PR department where five people have tried to workshop the perfect, least offensive wording -- which is deeply hilarious when it shows up in settings that are completely unfitting for that.

I recently got a like on the furry meetup/dating/hookup app Barq from someone who had obviously gotten an LLM to write their profile, including the 'Sexual Preferences' section. I won't quote all of it, but just the first part:

I'm an affectionate and passionate fox who loves deep connections and taking things slow — savoring every touch, every moment, and every spark.

✨ I believe intimacy is about more than just passion — it's about trust, closeness, and enjoying the little details together.

Now maybe I'm just a Luddite, but I feel like this is one of the places where expressing your actual personality would make sense... Are you going to get into bed with someone and then pull out your phone to ask GPT-4o how to dirty talk?

And to be clear, this wasn't a fake profile -- they were an actual person that I saw walking around the convention we were both at!

In a similar vein: I know somebody who runs a room party (read: orgy) at a specific event each year, and who asks attendees to submit proof of a recent negative STI test beforehand. The amount of headings, bullet points and waffle in this year's message made it obvious that they'd decided to delegate that responsibility to a computer.

Why we're doing this:

- To ensure everyone can enjoy the party safely and comfortably.

- To promote responsible health practices within our community.

That's one of my beefs with people using Generative AI for text and images -- it just gives off the aura that you don't care. And even if you do... well, I want to read what you want to say (typos and imperfections and all), not a computer's extrapolation of it!

My other problem with AI-generated text is less emotional and more pragmatic. This one is especially troublesome when researching anything technical online, but it's now become far more difficult to find trustworthy info.

Don't get me wrong, we've had SEO sludge websites cluttering up search results for a long time, often with scraped content from sites like Stack Overflow. But GenAI has been the best thing that could have happened to them.

Any technical query you can think of will now bring up tons of LLM-generated nonsense. If I see a page from a website I don't recognise, and it was published in the past 1-2 years, I have to try and figure out if it's:

- Written by somebody who knows what they're doing

- Written by somebody who knows what they're doing, but who's using GenAI to save time

- Written by an AI model

LLMs are really, really good at sounding human-like enough to pass the initial vibe check -- after all, they've been trained on tons of actual writing, so of course they'll replicate that. They're not always wrong, but they're wrong often enough that I don't want to just trust their results on a topic I'm unfamiliar with!

When competent people use them in well-intentioned ways and check all the results, then there's nothing wrong with that in theory, but I've seen so much LLM bullshit that I automatically go on red alert whenever I see something that looks like GPT output.

And this brings me to my final point: why I can't get myself to care about GenAI coding assistants.

As a reasonably experienced software engineer, I'm sure I could find ways to speed up some of the more drudgy parts of my work using them. I'm under no illusions that they are a panacea (I recently saw a "vibe coded" repo where Claude fixed the failing build by deleting all of the actual code) but I have seen examples where people have actually been productive by leveraging their strengths.

However. This just doesn't outweigh the negatives for me.

There are practical reasons. I don't want to become reliant on tools like Copilot or Claude, which are exclusively cloud-based, have usage limits, and rest on shaky economics that will probably see prices skyrocket once the AI money fountain dries up.

I'm certainly not the only one that sees the obvious tells of LLM-generated text and immediately assumes I'm being lied to, and I don't want my own work to be surrounded by the AI miasma.

But most of all... I'm just tired as fuck of having AI shoved down my throat everywhere. Refusing to use it for that reason might seem petty, and I know losing my business isn't going to hurt any of the big AI companies, but I can have a little pettiness if I want. As a treat.

I Continue To Be Tired

For shits and giggles, I asked Apple Intelligence's "writing tools" to generate a summary of this post:

The author expresses frustration with the pervasive and often unnecessary integration of generative AI into various aspects of life, from software engineering to everyday products. While acknowledging the potential benefits of AI, the author finds the constant push for AI features, coupled with the prevalence of low-quality AI-generated content, overwhelming and detrimental. The author longs for a break from the AI hype and a return to more meaningful and user-centric technology.

Yeah, I guess that's pretty accurate, even if it did miss out some of the most important points like the ads at Crufts. 🥜

I don't know where we go from here, though. I'm hoping the AI bubble will burst so that we can stop trying to shove "✨ Summarise" buttons and GPT-4 prompts into everything, and get more sensible uses of AI and machine learning tech. Is that unrealistic? Maybe.

But the vibes (coded or otherwise) are absolutely rancid at the moment. It's really not too dissimilar from the cryptocurrency space: The underlying tech is interesting, there are plenty of use cases for a viable alternative to regular currency and banking, but all the oxygen in the room is being sucked up by speculation, grifters and "we need to put tuna on the blockchain!".

If you got this far, then thank you for reading my rant. I've tried to avoid coming across as too much of a doomer, though I'm unsure whether I've actually succeeded there or not :p
