One Dog v. the Windows 3.1 Graphics Stack

Wherein I learn too much about VGA hardware and generate some really cool glitch art while I try to fix somebody else's fix for a video driver that's older than I am.

I'm a bit of a retro tech enjoyer, but I'm also pretty bad at it -- I don't have the space or the motivation to try and acquire actual old computers. Playing with 86Box/PCem is pretty fun, but it's not quite the same.

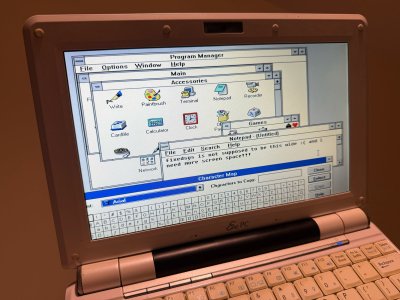

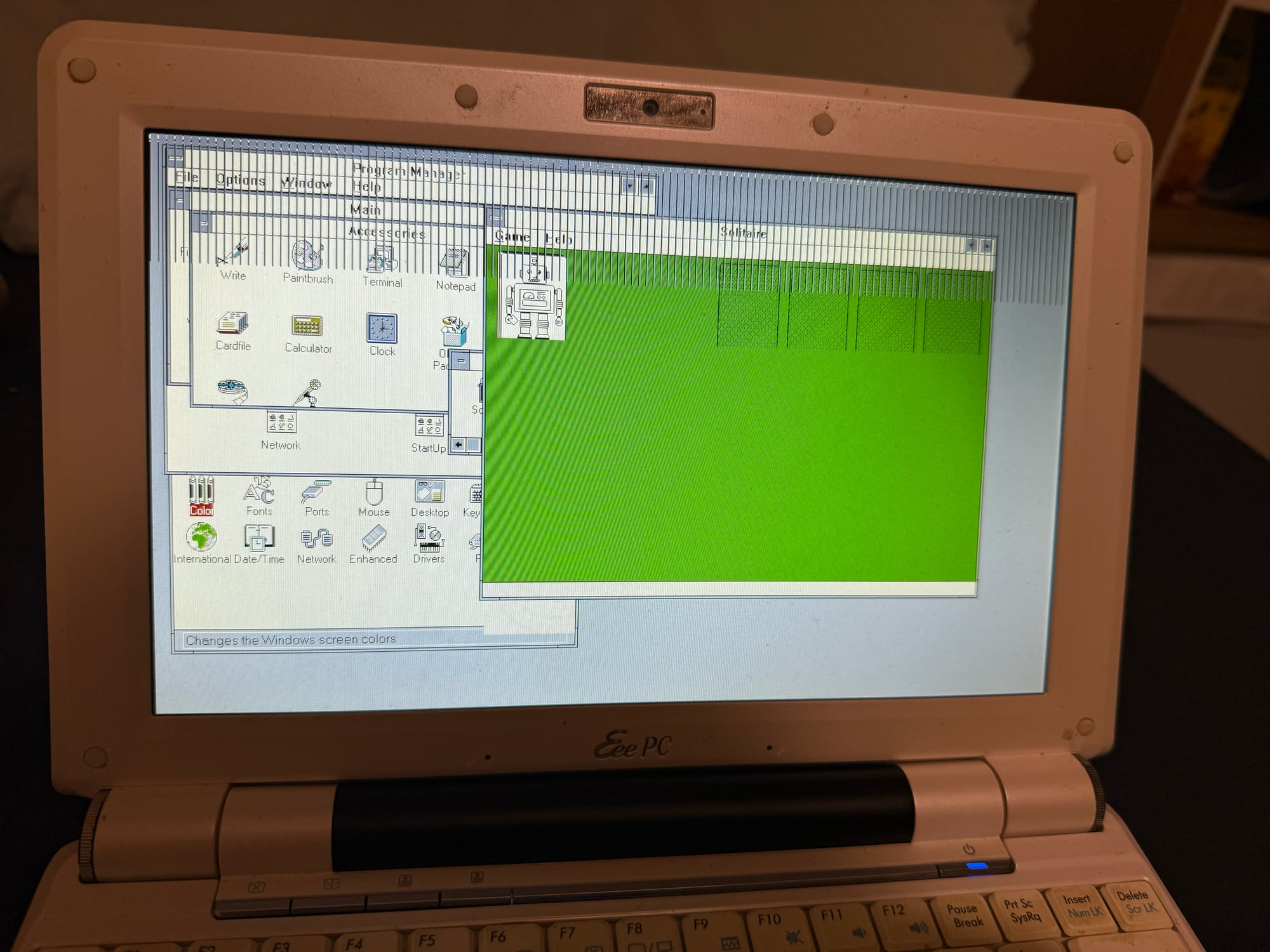

So, instead, I make do with what I have. And the most ridiculous x86 machine I own is the Asus Eee PC 1000H, a netbook that I got in 2008 when that category was still new and exciting. It's borderline useless nowadays (it can't even run most up-to-date Linux distros due to its lack of x86_64 support), so sticking weird and anachronistic OSes on it is one way to keep it relevant!

I'd like to write a full-fledged blog post about these adventures at some point, but for now I'm going to focus on one particular side quest: getting acceptable video output out of the 1000H when it's running Windows 3.11 for Workgroups.

By default, Windows 3.x renders using the standard "lowest common denominator" of video: VGA 640x480 at 16 colours. Unfortunately this looks awful on the Eee PC's beautiful 1024x600 screen, and it's not even the same aspect ratio.

But how can we do better? The 3.11 installer includes a smattering of drivers for long-obsolete video adapters, but they didn't have the prescience to support the Intel GMA 950 in my netbook. (For shame, Microsoft. For shame.)

There's an included 'Super VGA' driver that ostensibly supports up to 1024x768 at 256 colours, but it doesn't work, for... reasons that we'll go into, even though you'd think that a 2008 machine would surely be able to do SVGA. If I try to use it, I'll just get an error and Windows will fail to start.

The Horrors of IBM PC Video

Nowadays when we hear 'VGA' we usually think of the funky little blue analogue connector, or maybe just the 640x480 resolution itself. These are just aspects of VGA, which was a very specific video controller designed by IBM in the 1980s.

'Super VGA' must be a better version, right? Well, yes, and no. As I understand it, at the time, SVGA was just an umbrella term for anything more advanced than plain old VGA - but not a standard in and of itself. So lots of SVGA cards could do better resolutions and colour depths and refresh rates, but each piece of software had to implement support for each individual card.

This leads to the mind-numbing situation we get with the Windows driver. From the readme for this driver's standalone release, we get the following list of supported vendors:

- ATI VGA series, including Wonder, Wonder Plus and Wonder 24XL

- Cirrus Logic VGA (6420, 5420 series)

- Oak Technology VGA (077 series)

- Paradise VGA, including 1024 and Professional

- Trident VGA (8900C series), including Trident Impact

- Tseng VGA (ET4000 series), including a whole bunch of other cards with the same chips

- Video Seven VGA, including FastWrite, 1024i, VRAM and VRAM II

- Western Digital VGA

Obviously, my mid-2000s Intel video adapter is not among these. There was no real reason for Intel to use the same proprietary extensions as Trident or Oak or Video Seven. So we're boned, I guess...

They Should Make A Standard

Good news! They did! ... Too bad it came too late to be relevant to Windows 3.x.

VBE (VESA BIOS Extensions) is a generic interface that lets software talk to video adapters and do things that are beyond the capabilities of plain old VGA, and in theory it's exactly what we need. (And yes, that's the same VESA that's responsible for the monitor mounts.)

BearWindows has released VBE9x and VBEMP, which let Windows 9x and NT respectively use VBE, and both work pretty well. There's no 3.x version though.

There is also SVGAPatch, available from japheth.de, which patches Microsoft's 256-colour Super VGA driver to use VBE. The result is beautiful...

...when it works. You see, one of the headline features of early Windows was its compatibility with MS-DOS software. You could just pull up a DOS prompt in a window, and with 3.1's Enhanced Mode, you even got mostly-seamless multitasking of DOS applications alongside graphical Windows ones. This was a Big Deal™ in those days!

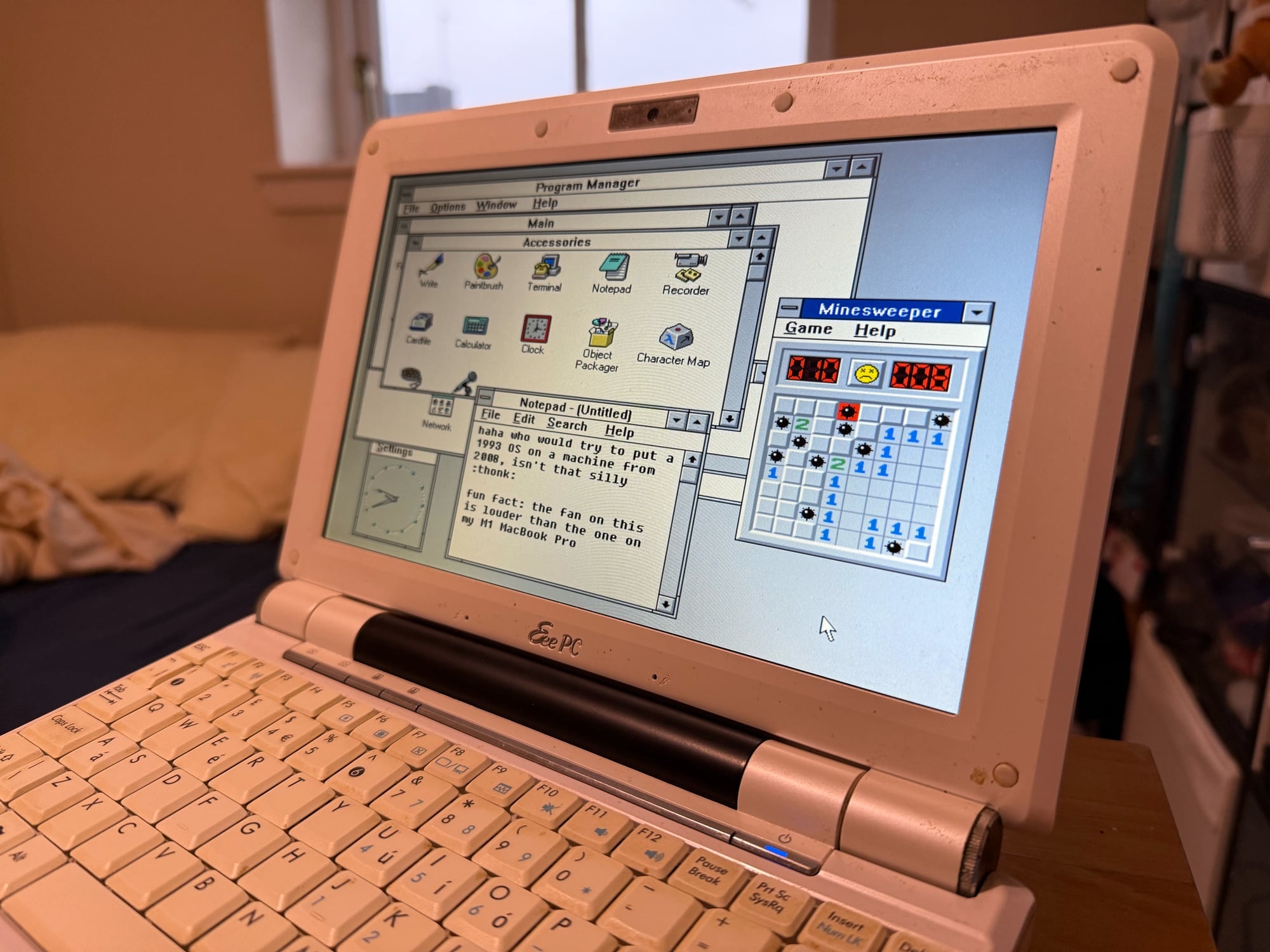

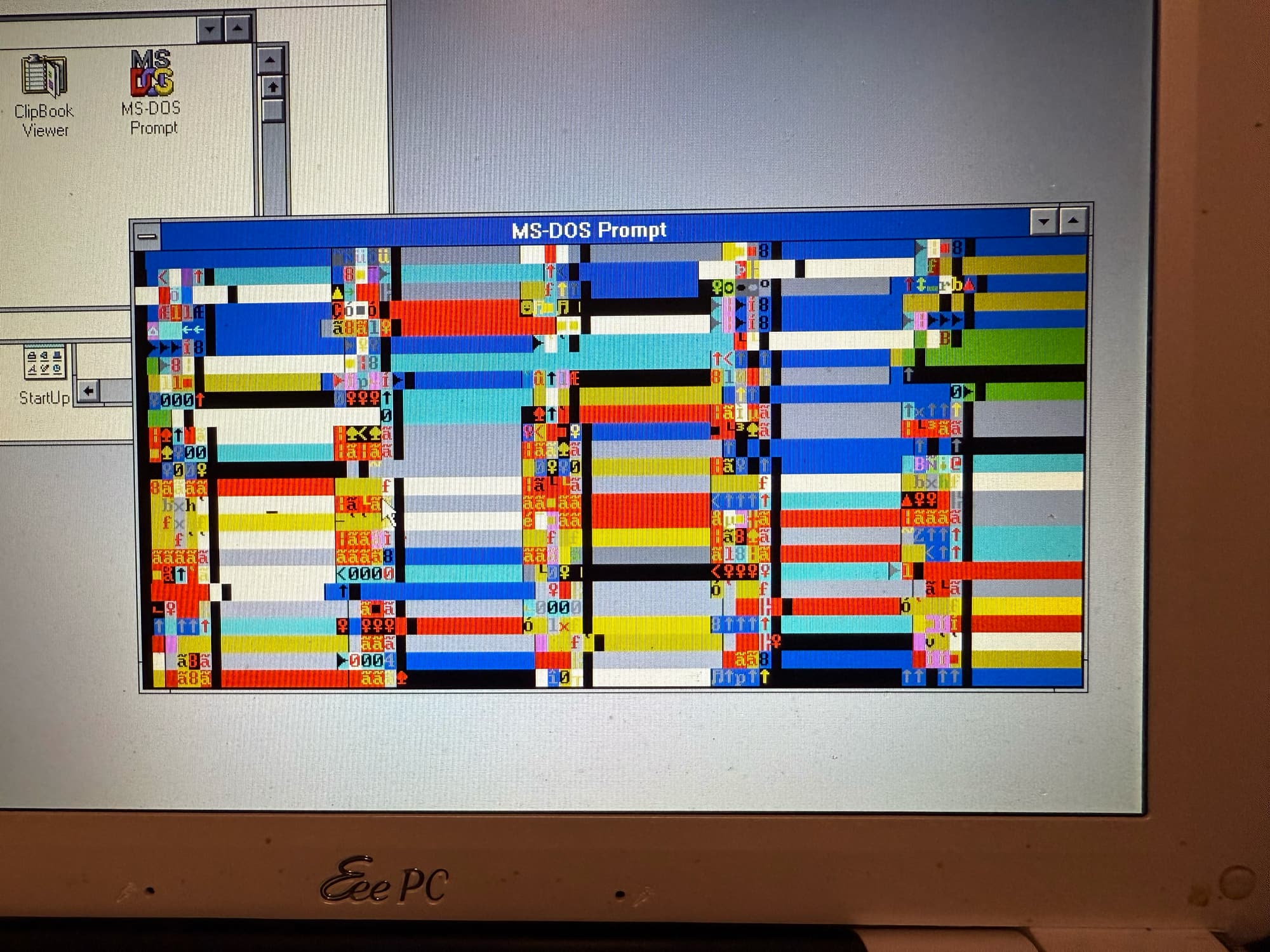

Unfortunately, SVGAPatch doesn't play nicely with this, and I wanted to figure out why. Entering full-screen mode and then returning to the Windows GUI will leave you with a corrupted screen. In some cases, opening any DOS prompt, even if it's in windowed mode, will cause it. Here's what happened when I opened a windowed prompt.

(Hank Hill voice) That prompt ain't right!

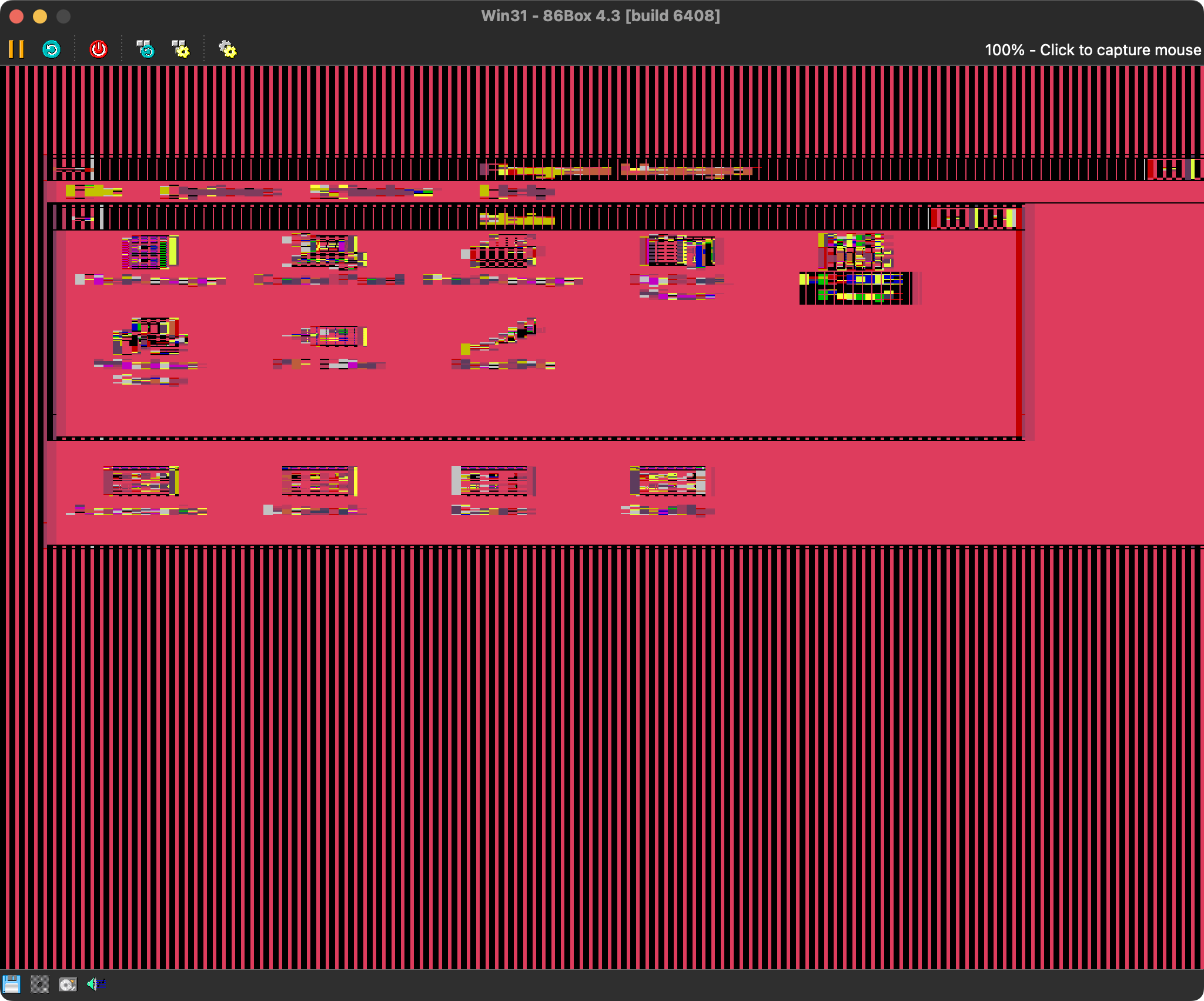

There's clearly some sort of state management issue; I've tried it with DOSBox, 86Box and my Eee PC, and interacting with DOS prompts will reliably break the driver on all three (but in subtly different ways).

So, let’s analyse how this works, with my very limited knowledge of the PC architecture. Can I find the problem, and maybe fix it??

Side note: Halfway through working on this I came across PluMGMK/vbesvga.drv which is a brand new driver that even supports true colour modes. This is, in all honesty, a far better approach than mine - but I was invested and wanted to see how far I could get with Microsoft's code.

The Windows 3.x Hellscape

To grok what’s going on, we need a cursory understanding of how Windows 3.x works in its “Enhanced Mode”, as an OS on top of MS-DOS.

Rather than trying to explain it myself, I’ll link to a 2010 post from Raymond Chen’s blog: If Windows 3.11 required a 32-bit processor, why was it called a 16-bit operating system? It’s good reading, but this is the most important bit for our purposes:

With Enhanced mode, there were actually three operating systems running at the same time. The operating system in charge of the show was the 32-bit virtual machine manager which ran in 32-bit protected mode. As you might suspect from its name, the virtual machine manager created virtual machines. Inside the first virtual machine ran… a copy of Standard mode Windows.

This funky approach is what allowed Windows 3.x to run DOS applications without each app tying up the entire machine.

The Display Driver Hellscape

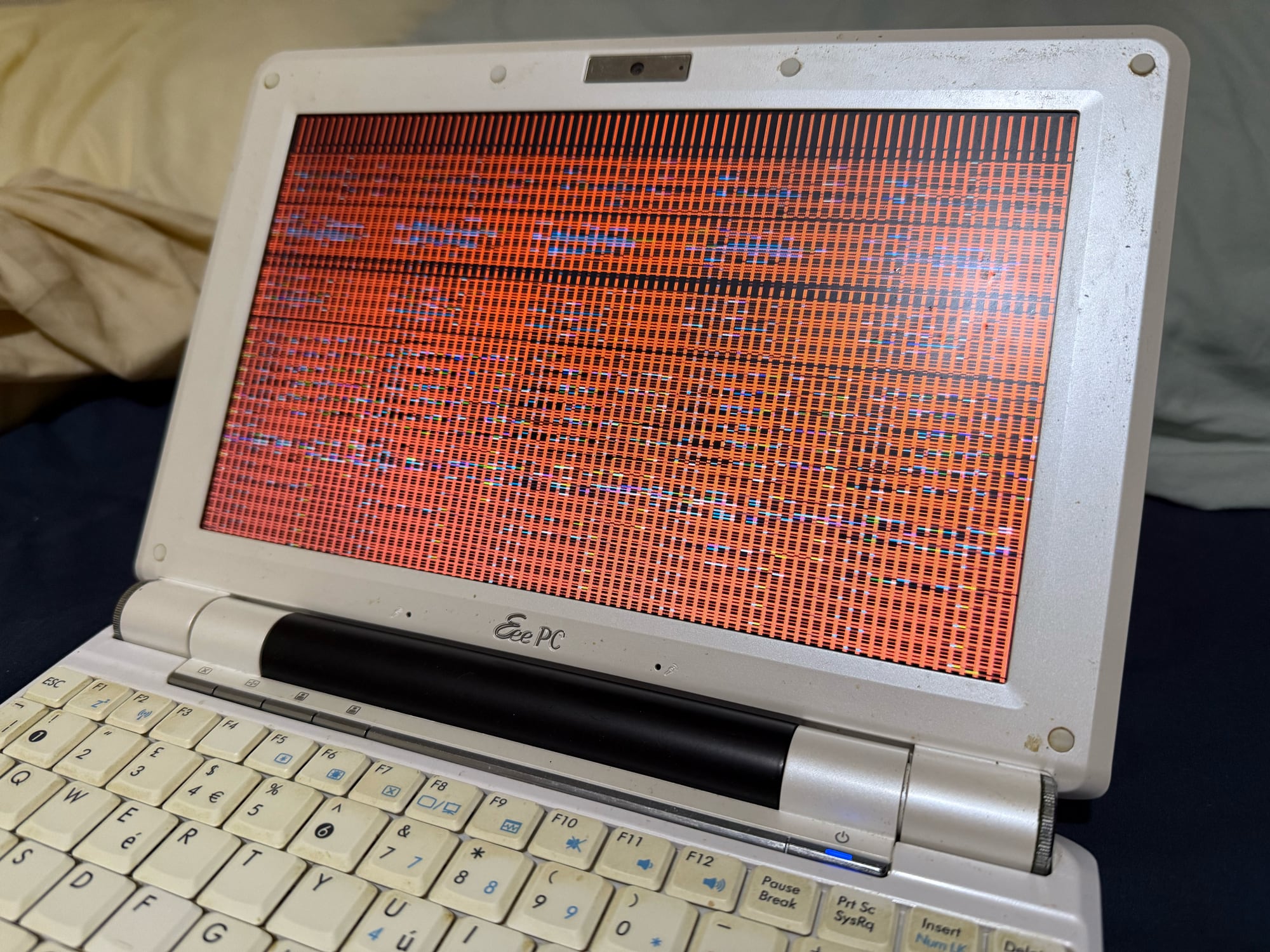

In Windows Setup, you get to tell it what video adapter you're using. You’d probably assume that this is choosing one driver. Nope!

When you pick an option from this list, it will…

- Install all the required files from the floppy diskettes (how quaint!)

  - A grabber

  - A display driver

  - A virtual display device

  - Required resources such as fonts

- Modify your SYSTEM.INI to tell Windows to use the specified drivers

- Add any other INI entries required

The different 256-colour SVGA entries are actually the same underlying drivers, but with different INI entries to set the desired resolution and DPI (96dpi for 'small fonts', 120dpi for 'large fonts').

More critically however, there are three different components here that you could all think of as a sort of driver. As I understand them…

The Grabber seems to be responsible for rendering the windowed versions of DOS apps. This is the one I’ve investigated the least.

The Display Driver runs inside the main Windows VM, and is responsible for setting up the hardware and rendering the GUI. In fact, in 3.x, each of these included its own implementation of much of GDI (the API for drawing).

The Virtual Display Device (VDD) runs as part of the underlying virtual machine manager, and acts somewhat like a multiplexer for the video hardware. If a DOS app is full screen, its commands are directly sent to the ‘real’ VGA adapter; otherwise, they’re emulated by the VDD.

There is a whole lot of nasty state synchronisation logic in the VDD that is likely related to my problems.

Interestingly, however, the SVGAPatch tool doesn’t alter the SVGA VDD at all — it only touches the display driver. Could that be the issue? 🤔

To continue, I’ll need to understand the display driver, the VDD and the undocumented patches made by SVGAPatch.

Gathering Information

As far as I can tell, this was a bit of a dark art back when 3.x was current, and it’s still rather difficult to find any info about all of these systems.

I learned a lot from this blog post on ‘OS/2 Museum’: Windows 3.x VDDVGA, and aside from that, I also had the Windows 3.1 DDK (Driver Development Kit), which is archived on WinWorld.

The DDK includes the following bits which are relevant to us:

- Display Driver source: VGA, IBM 8514, Video 7, 16-colour SVGA

- VDD source: VGA, IBM 8514, Video 7, 16-colour SVGA

- Grabber source: ... Basically all of them

- A pitiful amount of written documentation

Noticeably absent however are the 256-colour SVGA display driver and its associated VDD. I don’t know if these were included in a later DDK version, or if they just never left Microsoft at all.

I did grab the Windows 95 DDK to see if it contained anything relevant, but it wasn’t particularly useful.

All hope is not lost though; the 3.1 DDK is still helpful. I’m assuming that there are significant amounts of shared code between these drivers. We just need to wade through all the macro-laden assembly.

Reversing the Display Driver

Step 1: Load the Code

Disassembling 16-bit x86 code is pretty weird; I'm still not quite used to segments, as someone who's primarily looked at PowerPC and ARM code before. Still, it's doable.

IDA can load all the binaries with no issues, but unfortunately, not in the free version which is arbitrarily restricted to PE files. Ghidra can load the .drv display drivers, but not the VDD as it's actually a VxD.

I tried oshogbo/ghidra-lx-loader at first to load the VDD in Ghidra, but it fails. Then I tried yetmorecode/ghidra-lx-loader, which works, but at the time of writing you need to build a PR to get it to work with the latest Ghidra.

$ git clone git@github.com:yetmorecode/ghidra-lx-loader.git

$ cd ghidra-lx-loader

$ git fetch origin pull/7/head:buildfix

$ git switch buildfix

$ GHIDRA_INSTALL_DIR=~/Downloads/ghidra_11.2.1_PUBLIC gradle buildExtension

Once built, go to File > Install Extensions in Ghidra, add the built extension zip file from dist/, and restart Ghidra.

You can now import the vddsvga.386 file and it will be detected as a 'Linear Executable'. However, it's nowhere near as seamless as in IDA...

This VxD contains both 32-bit code and 16-bit code, but as far as I can tell, Ghidra expects the entire file to be either one or the other. So if I import the file with the default 'language' of 32-bit, it'll fail to decode the real mode initialisation code in the last segment. OTOH, if I switch it to 16-bit, then it'll fail to decode the vast majority of the code in the file.
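To see why the 16-bit/32-bit choice matters so much, here's a tiny sketch in C. It's my own illustration and has nothing to do with how Ghidra works internally; the name mov_imm_length is made up, and it only handles one instruction form (MOV reg, imm). The point is that the same bytes have a different instruction length depending on the segment's default operand size, and once one length is wrong, everything after it decodes as garbage.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Length in bytes of a `MOV reg, imm` instruction (opcodes B8h..BFh),
 * given the default operand size of the code segment (16 or 32).
 * An operand-size prefix (66h) flips that default.
 * Returns 0 if the bytes are not this instruction form or are truncated. */
size_t mov_imm_length(const uint8_t *code, size_t len, int default_bits)
{
    assert(default_bits == 16 || default_bits == 32);

    size_t pos = 0;
    int bits = default_bits;

    if (pos < len && code[pos] == 0x66) {    /* operand-size override */
        bits = (bits == 16) ? 32 : 16;
        pos++;
    }
    if (pos >= len || code[pos] < 0xB8 || code[pos] > 0xBF)
        return 0;
    pos++;                                    /* the opcode byte itself */

    size_t total = pos + (size_t)(bits / 8);  /* plus the immediate */
    return (total <= len) ? total : 0;
}
```

Fed the bytes B8 00 0F CD 10, this reports 3 bytes in 16-bit mode (mov ax, 0F00h followed by a separate int 10h), but 5 bytes in 32-bit mode, where the int 10h gets swallowed into the immediate.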

I'm probably missing something due to my lack of familiarity with x86 and its tooling 🤔

Step 2: Match Things Up

After loading up svga256.drv (both the original Microsoft version and the SVGAPatch-modified version) and vddsvga.386, I was ready to begin.

I began with the .drv file. Conveniently, this one has a whole bunch of exported functions like GETCHARWIDTH, STRETCHBLT and VIDEOINIT_ATI, so I thought it'd be good to go through these and compare them to the source from the DDK, hopefully getting to name some other functions and global variables in the process.

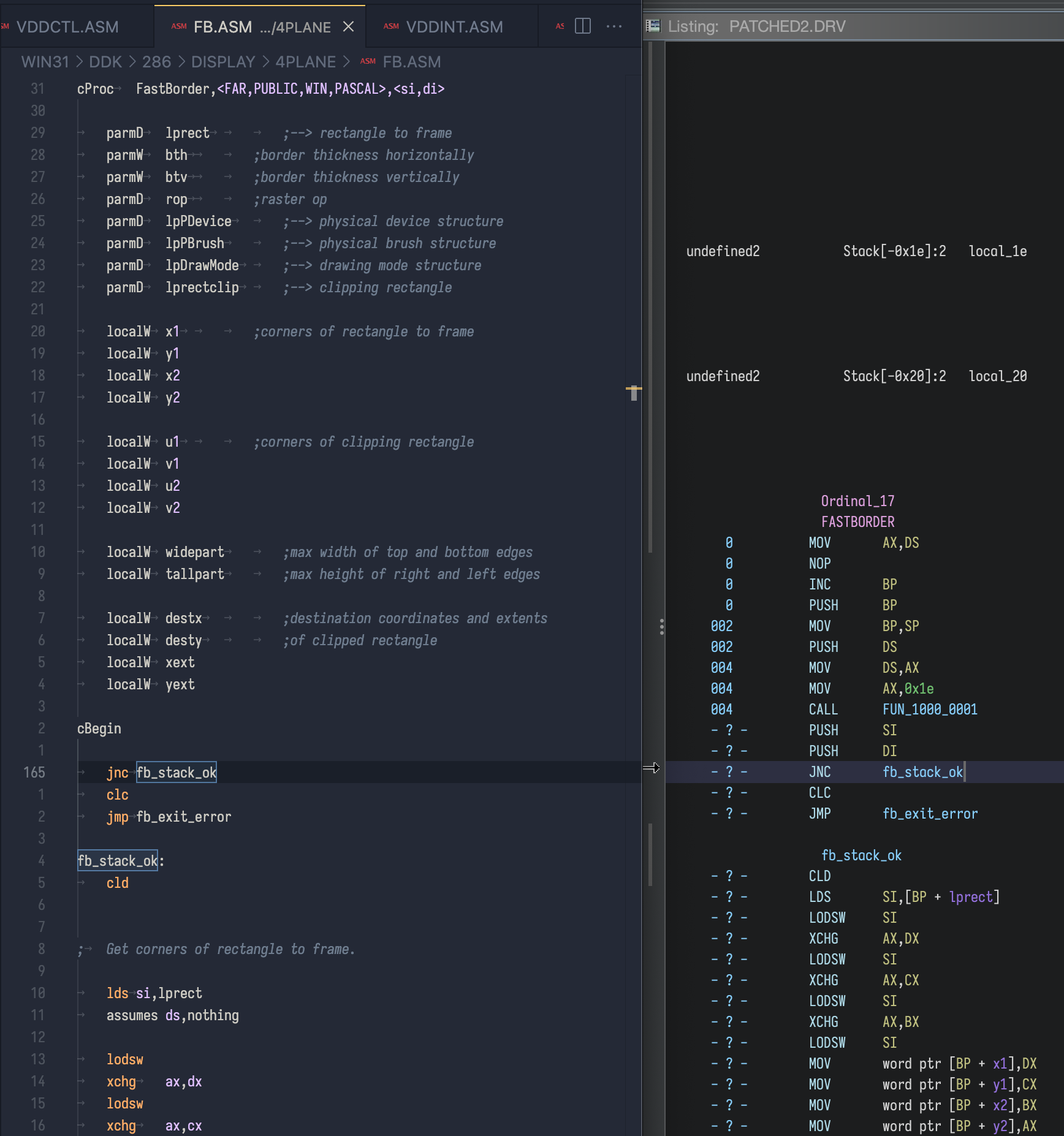

Since FASTBORDER is the first one in the file, I started looking at that - it corresponds to WIN31/DDK/286/DISPLAY/4PLANE/FB.ASM in the DDK.

This is when I found out just how heavily this source code relies on macros...

On the left is the DDK's source for FASTBORDER, and on the right is how it appears in Ghidra (after I've manually applied labels and variable/parameter names).

This is using cProc and associated macros, which seems to be a way to make defining C-compatible procedures more ergonomic... but it's not documented anywhere that I can see.

I can read the source for these macros, but they're somewhat inscrutable to say the least, with no comments and almost no indentation. A representative code sample:

if ???+?po

if ?chkstk1

push bp

mov bp,sp

else

if ???

enter ???,0

else

push bp

mov bp,sp

endif

endif

endif

For my purposes, I don't really need to understand this, I just need to know that I can skip past the initialisation guff every time I see it in a cProc procedure.

Likewise, there's arg and cCall macros used for calling these, but those are fairly self-explanatory:

arg <lpPDevice,destx,desty,ax,ax,ax,ax,xext,yext,rop,lpPBrush,lpDrawMode>

cCall BitBlt

This just pushes all of these arguments onto the stack, and calls the function.

So FASTBORDER is essentially the same in svga256.drv as it is in the VGA driver that I have source for; that's promising!

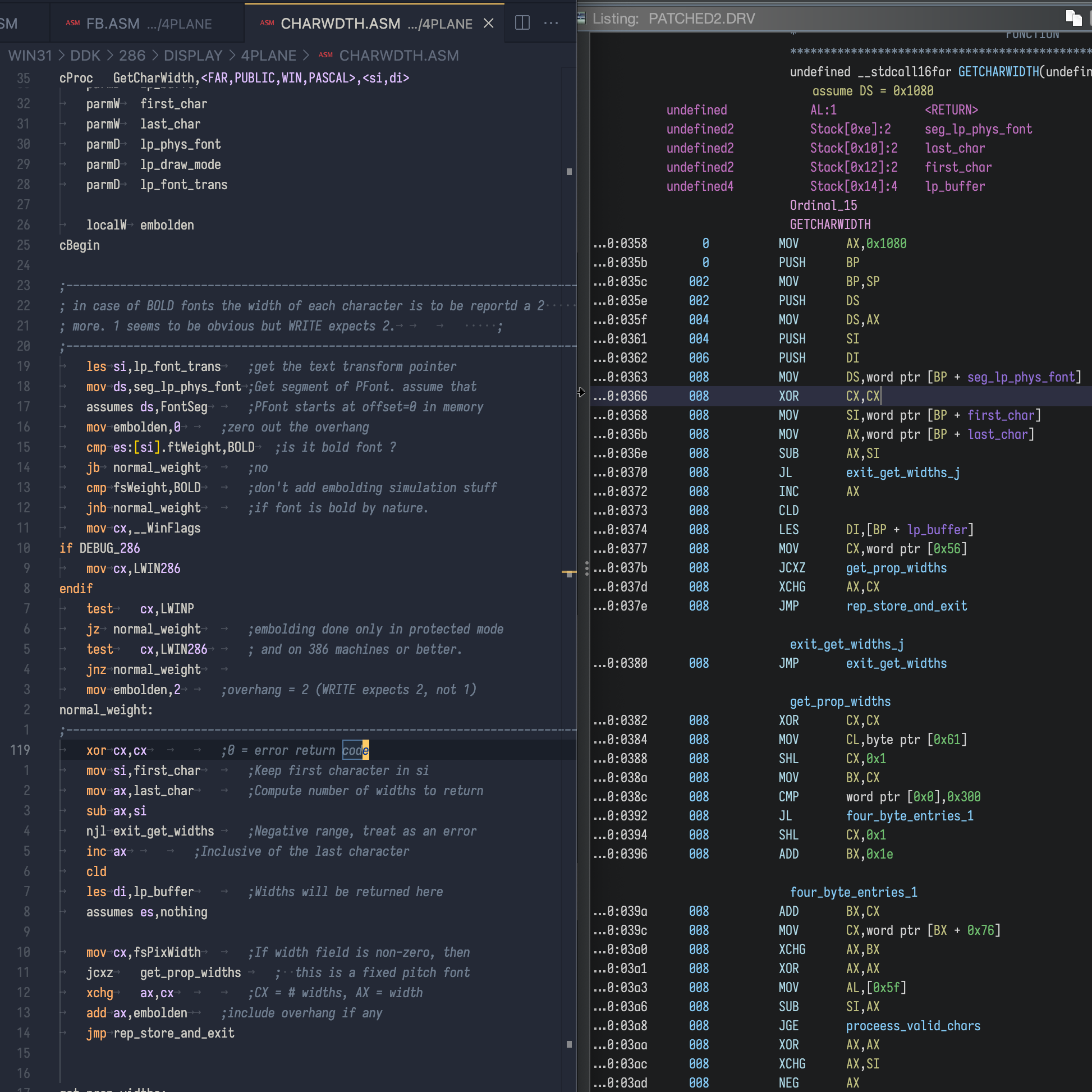

The next function is GETCHARWIDTH, which is used to retrieve the widths of characters in a font. This is where I reach a new issue - this function isn't the same.

Also, just to make my life a bit harder, the proc has 7 parameters but not all of them are used -- meaning that I need to be extra careful about making sure I name them correctly in Ghidra.

Turns out, there's some functionality in the VGA driver that adjusts the width of bold fonts. This does not seem to be present in svga256.drv!

I checked all the other implementations in the DDK's various drivers, but the VGA one is still the closest match. The Video 7 driver doesn't include this feature, but there are other differences in the code.

I wondered if this feature was added later, and maybe the SVGA256 driver was forked from an older version. There are some changelog-esque comments in many of these files, but MS don't seem to have done a great job at keeping them up to date :(

; Created: Thu 30-Apr-1987

; Author: Walt Moore [waltm]

...

; History:

; Monday 3-October-1988 13:52 -by- Ron Gery [rong]

; moved into fixed code segment for fonts-in-EMS

This is from the header for the VGA driver's CHARWDTH.ASM file.

It's very slightly different from the equivalent file in the PC-98 video driver, but both of these have identical dates and history entries, which suggests that I just can't put much faith into those comments.

Anyway, moving on...

The next export after this was REALIZEOBJECT, which is the Windows GDI function that takes an object (pen, brush or font) and processes it to make it suitable for the driver - for example, choosing the closest available colour to the one requested by the application.
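To make "choosing the closest available colour" a bit more concrete, here's a minimal sketch of the generic idea in C. This is not a translation of the driver's code (I haven't shown what its colour matching actually does); it's just the textbook nearest-match over a palette using squared distance in RGB space, and the names struct rgb and nearest_colour are mine.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>
#include <stdint.h>

struct rgb { uint8_t r, g, b; };

/* Return the index of the palette entry closest to `want`,
 * using squared Euclidean distance in RGB space.
 * Ties go to the lowest index. */
size_t nearest_colour(const struct rgb *palette, size_t count, struct rgb want)
{
    assert(palette != NULL && count > 0);

    size_t best = 0;
    long best_dist = LONG_MAX;

    for (size_t i = 0; i < count; i++) {
        long dr = (long)palette[i].r - want.r;
        long dg = (long)palette[i].g - want.g;
        long db = (long)palette[i].b - want.b;
        long dist = dr * dr + dg * dg + db * db;
        if (dist < best_dist) {
            best_dist = dist;
            best = i;
        }
    }
    return best;
}
```

With a three-entry palette of black, pure red and white, asking for (200, 10, 10) picks the red entry.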

This one gave me a little more grief because it was my first time encountering a jump table, but it was doable. However... the colour handling logic is different, of course!

In the VGA driver's source, we see this logic with some curiously commented out code:

realize_pen_10:

xchg ax,cx ;Set pen type into CX

lea si,[si].lopnColor ;--> RGB color

; call convert_index ; convert the index into DH if the

; color is actually an index

; jc realize_pen_20 ; it was an index

call sum_RGB_colors ;Sum up the color

Whereas in SVGA256, the call to sum_RGB_colors has been replaced by ... a manually-inlined version of it? 🤔

Also, while the initial dispatch code is identical to the VGA driver's, the implementation of everything from realize_brush onwards is almost identical to the one from the Video 7 driver. Odd.

After this, I realised that looking further at the GDI functions probably wouldn't be super helpful. It's helped me to get my bearings in this codebase, but what I really care about is how it interacts with the video adapter.

Step 3: Initialising the Video Adapter

I'd previously mentioned that when you select a type of video adapter in Windows Setup, it creates some INI entries in SYSTEM.INI.

If I pick Super VGA (800x600, 256 colours, small fonts), I get the following entries:

[svga256.drv]

dpi=96

resolution=2

And after booting into Windows, the patched driver automatically adds these extra entries...

svgamode=48

ChipSet=Tseng ET4000

LatchCapable=No

I have no clue what these mean, but luckily, since these are named with nice text strings like ChipSet, it's very easy to find the code that interacts with them within the driver.

The first one is in a function that, based on its placement, appears to be driver_initialization. I believe this is automatically called by Windows as the very first thing after the driver has been loaded into RAM.

If I look at the VGA driver from the DDK, it's got some pretty obtuse logic:

- Construct some flags based on whether we're on DOS 3.1.0 or higher, and whether we're on OS/2 or not

- Call a dev_initialization function

  - Checks how much video memory is present, and disables a specific BitBlt feature if it's less than 256KB

- Load the MouseTrails setting from an INI

- Mess with the function pointers to ExtTextOut and StrBlt for reasons I don't fully understand

The Video 7 driver is a bit simpler -- it has the same flag logic, and it calls dev_initialization to assert that the driver is running on a 286 CPU or better, but it doesn't look at any INIs or mess with function pointers.

On the other hand, the SVGA256 driver we're looking at is trivial.

Translated into pseudo-C just to make it a bit easier to understand:

short ini_resolution = 0;

short ini_dpi = 96;

short driver_initialization(/* args not relevant here */) {

short r = GetPrivateProfileInt("svga256.drv", "resolution", 0, "system.ini") & 3;

if (r != 0) {

ini_resolution = r;

ini_dpi = GetPrivateProfileInt("svga256.drv", "dpi", 0, "system.ini");

return 1;

}

return 0;

}

This function is untouched by SVGAPatch.

So that's useful... now, where do these get used?

The function directly after it seems to be the equivalent to physical_enable. This is where it truly gets interesting - this is what sets up the VGA adapter!

We can look at this in VGA.ASM in the DDK; this uses assembler directives to generate different code for the VGA driver and for the 16-colour SVGA driver.

The code I see in SVGA256 is obviously an evolution of the latter. Once again I'll translate it to pseudo-C to make it easier to follow.

However, first I need to go on a slight tangent...

System calls on the PC

How do you "reach out" and interact with the OS or the hardware? There are three approaches we see in this driver.

Firstly, Windows functions like SetPalette and WritePrivateProfileString are imported from DLLs, so once you include the boilerplate to tell the assembler/linker about them, you can just call them like you would a function in your own code.

Then we have interrupts, which I've represented in this pseudocode using fake functions like vga_enable_refresh(). With these, you set certain registers to specific values (depending on what you want to do), and then use the x86 INT instruction.

I've looked them up in this beautiful 90s-web adaptation of Ralf Brown's Interrupt List, which is a very useful resource - I've also linked the individual pages below where referenced.

INT 10h is the PC's standard interrupt for doing anything video-related; the contents of the ax register identify what operation you want to do.

INT 2Fh is the "Multiplex" operation, which is kind of like a miscellaneous bucket for services installed by MS-DOS, Windows, and other applications like TSRs.

Lastly, you can also just poke hardware registers directly using the IN and OUT x86 instructions. This isn't used in this particular function, but it'll show up in plenty of other places, as this is a fundamental part of working with VGA.
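Since the value in ax keeps coming up, here's a small sketch of how those 16-bit values split into their high and low halves (AH and AL). The register values are the ones that show up in this post; the helper names (reg_high, reg_low, make_ax) and the enum names are made up for illustration.

```c
#include <stdint.h>

/* The 16-bit AX register is really two 8-bit halves: AH (high) and AL (low).
 * For INT 10h, AH usually selects the function and AL the sub-function. */
static inline uint8_t reg_high(uint16_t ax) { return (uint8_t)(ax >> 8); }
static inline uint8_t reg_low(uint16_t ax)  { return (uint8_t)(ax & 0xFF); }

static inline uint16_t make_ax(uint8_t ah, uint8_t al)
{
    return (uint16_t)(((unsigned)ah << 8) | al);
}

/* AX values that appear in this post (names are hypothetical). */
enum {
    INT10_GET_VIDEO_MODE  = 0x0F00, /* AH=0Fh */
    INT10_REFRESH_ENABLE  = 0x1200, /* AH=12h, AL=00h, used with BL=36h */
    INT10_REFRESH_DISABLE = 0x1201  /* AH=12h, AL=01h, used with BL=36h */
};
```

So ax=1201h is function 12h with sub-function 01h, and a VBE call like 4F02h is AH=4Fh, AL=02h.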

Tearing apart physical_enable

struct int_phys_device {

BITMAP bitmap;

char ipd_format, ipd_equipment, ipd_enabled;

};

struct ModeEntry {

char *chipSetName;

short mode;

char *modeString;

short functionID;

};

struct ModeEntry modes_800x600[] = {

{ "Tseng ET4000", 48, "48", 2000 },

{ "Video 7", 0x8000 | 105, "105", 2003 },

{ "Trident", 94, "94", 2006 },

{ "Oak", 84, "84", 2009 },

{ "Western Digital", 92, "92", 2012 },

{ "ATI VGA Wonder", 99, "99", 2015 },

{ "Cirrus Logic", 48, "48", 2018 },

{ "Cirrus Logic 542x", 92, "92", 2021 }

};

// ... plus two more for 640x480 and 1024x768

struct ModeTable {

struct ModeEntry *modes;

short width, height, _padding;

};

struct ModeTable wModeTable[] = {

{ 0, 0, 0, 0 },

{ modes_640x480, 640, 480, 0 },

{ modes_800x600, 800, 600, 0 },

{ modes_1024x768, 1024, 768, 0 }

};

short ScratchSel;

short wGraphicsMode;

short CurrentWidth, CurrentHeight;

short RequestedMode;

short FunctionID;

char latchCapableFlag;

(int, short) physical_enable(struct int_phys_device *pDevice) {

// save the original video mode

// this calls INT 10h with ax=0F00h

pDevice->ipd_format = get_current_video_mode();

ScratchSel = AllocSelector(0);

short mode = wGraphicsMode;

if (mode == 0) {

mode = GetPrivateProfileInt("svga256.drv", "svgamode", 0, "system.ini");

}

short originalMode = mode;

retry:

if (ini_resolution == 0) {

// 1020 is the id of a "not specified" error message

return (0, 1020);

}

struct ModeEntry *entry = wModeTable[ini_resolution].modes;

CurrentWidth = wModeTable[ini_resolution].width;

CurrentHeight = wModeTable[ini_resolution].height;

RequestedMode = mode;

short c;

if (mode == 0) goto SVGA_Next;

// if a mode was specified in the INI, search for it

do {

c = (entry++)->mode & 0xFF;

if (c == 0) goto tryFullSearch;

} while (c != mode);

tryMode:

if (SetAndValidateMode(c, (CurrentWidth / 8) - 1, CurrentHeight - 1)) {

goto SVGA_Success;

}

SVGA_Next:

if (RequestedMode != 0) goto tryFullSearch;

c = (entry++)->mode;

if (c != 0) goto tryMode;

// full scan failed, so the driver can't work at all

// 1010 is the ID of the "Failed to initialise" message

return (0, 1010);

tryFullSearch:

// failed to find the mode specified in the INI, or it didn't work

// so do a scan through all modes again

mode = 0;

goto retry;

SVGA_Success:

// at this point, a mode has been found

entry--;

if (entry->functionID == 2012 && special_type_2012()) {

// if certain conditions are met, switch from "Western Digital" to "Cirrus Logic 542x"

entry += 3;

} else if (entry->functionID == 2000 && special_type_2000()) {

// switch from "Tseng ET4000" to "Cirrus Logic"

entry += 6;

}

wGraphicsMode = entry->mode;

FunctionID = entry->functionID;

if (mode != originalMode) {

WritePrivateProfileString("svga256.drv", "svgamode", entry->modeString, "system.ini");

WritePrivateProfileString("svga256.drv", "ChipSet", entry->chipSetName, "system.ini");

}

// this calls INT 10h with ax=1201h, bl=36h

vga_disable_refresh();

// uses the FunctionID to look up chipset-specific 'VideoInit', 'SetBank'

// and 'BltSpecial' functions

resolve_procs();

// calls the chipset-specific VideoInit function

(*ptr_videoinit)();

latchCapableFlag = checkLatchCapability();

WritePrivateProfileString("svga256.drv", "LatchCapable", latchCapableFlag ? "Yes" : "No", "system.ini");

enabled_flag = 0xFF;

SetPalette(0, 256, &adPalette);

clear_framebuffer();

// this calls INT 10h with ax=1200h, bl=36h

vga_enable_refresh();

// this will be explained later

call_VDD_Set_Addresses();

// this calls INT 2Fh with ax=4000h, and will be explained later too

switch_to_background();

return (1, 0);

}

So that's the code, which doesn't translate super cleanly into pseudo-C, but I've tried my best. A high-level overview of what it's doing:

- Record the current video mode before we do anything

- Check if a svgamode entry exists in SYSTEM.INI

- If so, call SetAndValidateMode to try that mode

- If there was no entry or that mode failed, then go through each supported mode in turn until we find one that works

- If we chose the Western Digital mode and some opaque checks succeed, then switch to Cirrus Logic 542x

- If we chose the Tseng ET4000 mode and some other checks succeed, then switch to Cirrus Logic

- Save the new mode and chipset name to the SYSTEM.INI file

- Call some chipset-specific initialisation code

- Check the 'latch capability', whatever that means, and write it to the INI as well

- Set the Windows palette

- Clear the framebuffer

- Set up the VDD
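The goto-heavy search in the middle of that function boils down to "try the saved mode first, then fall back to scanning the whole table". Here's a goto-free sketch of that in plain C. It's deliberately simplified: it ignores the low-byte masking, and it will try every entry matching the preferred mode rather than just the first. The names pick_mode, try_mode_fn and accept_only are all hypothetical, with the callback standing in for SetAndValidateMode.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical callback: returns nonzero if the adapter accepted `mode`. */
typedef int (*try_mode_fn)(int mode, void *ctx);

/* Pick a working mode from `modes` (terminated by a 0 entry).
 * If `preferred` is nonzero and listed, it is tried first; if it is
 * missing or rejected, every entry is tried in table order.
 * Returns the index of the entry that worked, or -1 if none did. */
int pick_mode(const int *modes, int preferred, try_mode_fn try_mode, void *ctx)
{
    assert(modes != NULL && try_mode != NULL);

    if (preferred != 0) {
        for (size_t i = 0; modes[i] != 0; i++) {
            if (modes[i] == preferred && try_mode(modes[i], ctx))
                return (int)i;
        }
    }
    for (size_t i = 0; modes[i] != 0; i++) {
        if (try_mode(modes[i], ctx))
            return (int)i;
    }
    return -1;
}

/* Example callback for experiments: accepts only the mode stored in *ctx. */
int accept_only(int mode, void *ctx)
{
    return mode == *(const int *)ctx;
}
```

With the table { 48, 105, 94, 84, 0 }, a saved mode of 48 and an "adapter" that only accepts 94, the preferred mode is rejected and the full scan settles on index 2.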

Now we're in a good position to ask - how does this interact with SVGAPatch? This code from Microsoft's driver is written to let them support a few different chipsets. But the point of SVGAPatch is to use the newer VBE standard, and in theory, support any video adapter that's VBE compliant!

This function itself doesn't change, but it's directly adjacent to the stuff that does.

SVGAPatch's Secret Sauce

When you run the patcher, it gives you some output that tells you what it's changed. Now I know what all the relevant code does.

Segment 3: addr=3AA0, size=917

Segment patched at offset 37E

Segment patched at offset 3D5

Segment patched at offset 42C

Segment patched at offset 481

Segment 11: addr=15240, size=7CD

Segment patched at offset 0

The first 3 patches are setting the 'special function ID' for the very first chipset in each of the three lists (one for each supported resolution) to 2000.

The one at offset 481 is completely rewriting the SetAndValidateMode function. Finally, the one in Segment 11 is rewriting the chipset-specific functions with IDs 2000 and 2001.

So what's going on here? This patch is actually very simple, but pretty smart.

| Function | Original MS code | Replacement from SVGAPatch |
|---|---|---|
| SetAndValidateMode | Use the VGA BIOS to try and set the video mode, then check whether it succeeded and whether the VGA adapter claims to be in the requested SVGA resolution | Use VBE operation 4F02h to request an extended video mode depending on the configured resolution |
| SETBANK_TRIDENT | Write a non-standard value to 3C4h (the VGA Sequencer Address Register) | Use VBE operation 4F05h to move the CPU's window to the video memory |
| VIDEOINIT_TRIDENT | Write to the VGA CRTC's Offset Register to set the amount of bytes between scan lines | Use VBE operation 4F06h to set the scan line length in bytes |
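For reference, here's a rough sketch of the numbers involved in those three VBE calls, based on my reading of the VBE spec rather than on SVGAPatch's actual bytes. The mode numbers 101h/103h/105h are the standard VESA 256-colour modes for 640x480, 800x600 and 1024x768; whether the patch uses exactly those is an assumption on my part, and the function names are made up.

```c
#include <assert.h>
#include <stdint.h>

/* VBE function numbers, passed in AX to INT 10h. */
enum {
    VBE_SET_MODE         = 0x4F02, /* BX = mode number */
    VBE_WINDOW_CONTROL   = 0x4F05, /* BH = 0 (set), BL = window, DX = position */
    VBE_SCAN_LINE_LENGTH = 0x4F06, /* BL = sub-function, CX = length */
    VBE_STATUS_OK        = 0x004F  /* AX on return when the call succeeded */
};

/* Standard VESA 256-colour mode for the driver's resolution= setting
 * (1 = 640x480, 2 = 800x600, 3 = 1024x768). Returns 0 for "not set".
 * Assumption: the patched driver asks for these standard numbers. */
uint16_t vbe_mode_for_resolution(int ini_resolution)
{
    static const uint16_t modes[] = { 0, 0x101, 0x103, 0x105 };
    assert(ini_resolution >= 0 && ini_resolution <= 3);
    return modes[ini_resolution];
}

/* Window position (the DX value for 4F05h) that exposes a given byte of
 * video memory, for a window granularity given in KB. */
uint16_t vbe_window_position(uint32_t byte_offset, uint16_t granularity_kb)
{
    assert(granularity_kb > 0);
    return (uint16_t)(byte_offset / ((uint32_t)granularity_kb * 1024u));
}
```

As a worked example: at 800x600 with one byte per pixel, the middle of the screen (row 300) starts at byte 240000, which lands in window position 3 if the granularity is 64KB. That's why a banked driver has to keep calling its SetBank function as it draws down the screen.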

Finally, to tie this into physical_enable, the new logic ends up being as follows...

- Scan through all the supported modes in order, calling SetAndValidateMode for each one

- The first one will succeed, so it's picked by default

- Since the Function ID was replaced with 2000, the rewritten 'TRIDENT' chipset-specific functions are used

- Since the chipset name and mode values are now unused, we see the values for the 'Tseng ET4000' written to SYSTEM.INI, just because it's the first one in the list

And with that, we've solved one piece of the puzzle - how SVGAPatch works. But we've still not answered the question: why do DOS prompts cause trouble?

Understanding the Virtual Display Device

It's time to go down yet another rabbit hole! There is a pretty good explanation of VDDs on the OS/2 Museum blog post that I linked earlier: Windows 3.x VDDVGA

TL;DR: DOS programs are written to expect exclusive access to the computer's hardware (including the video adapter), and Windows cleverly tricks them into talking to virtualised implementations instead.

The author was writing their own display driver and having trouble with DOS prompts (sound familiar?), and discovered that certain parts of the VGA registers were changing in unexpected ways when the mode changed inside a windowed DOS prompt ... which in theory, should not affect the real VGA adapter, as the GUI is supposed to have control over it.

They also note that a specific DspDrvr_Addresses function (which is located in the VDD and called from the display driver) doesn't actually do what the comments say it does, and that our SVGA256.DRV has special behaviour here.

A corollary is that passing 0FFFFh as the latch byte address to the VDD (something that SVGA256.DRV does) tells VDDVGA.386 that there is no video memory to share. In that situation, VDDVGA.386 does not try any hair-raising schemes to modify the VGA register state behind the display driver’s back.

Huh. (I'll pretend I know what that means.)

As with the display driver... the Windows DDK gives us the source code for the VGA VDD, but not for the SVGA one that we're using. So I delved into the SVGA VDD with two goals in mind:

- Find out what changes Microsoft made in between the VGA VDD and the SVGA VDD

  - Is there chipset-specific behaviour hiding here that needs to be patched for generic VBE support?

- Try and learn what the deal is with DspDrvr_Addresses

I painstakingly mapped out the functions in the VDD. The majority of them were unchanged, but I did come across some interesting-looking differences, and I learned a lot about how the system works in the process.

Tangent: The VDD's Architecture

If we look at VDDCTL.ASM, we see a whole set of interesting entry points to the VDD - some of the more notable ones:

| Function | Purpose |
|---|---|
| VDD_Device_Init | Set up the System VM (the one that Windows itself runs in) |
| VDD_Create_VM | Set up another VM (for a DOS application) |
| VDD_Set_Device_Focus | Called when the active VM is switching |

Just to keep things spicy, there's also a bunch of external entry points in VDDSVC.ASM, and there's custom interrupt handlers in VDDINT.ASM.

Anyway, each VM gets its own instance of a big structure called VDD_CB_Struc, which is defined in VDDDEF.INC. This contains a whole lot of stuff, including:

- Various bitfields with all sorts of flags

- A complete mirror of the VGA controller's state

- Details of what video memory has been allocated by this particular VM

This whole system is impressively complex! There's a large VDDOEM.ASM file dedicated entirely to vendor-specific custom code, which is exactly what I was scared of...but we'll see how far we can get.

VDDTIO.ASM has a ton of logic that traps read/write accesses to the various VGA registers. I believe this is used by backgrounded VMs - so the active VM gets direct access, but any VM running in the background will just hit these routines and end up talking to the VDD's simulated VGA adapter.
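Here's how I picture that routing, as a heavily simplified sketch in C. None of these names come from the DDK: vm_video_state is a hypothetical stand-in for the relevant slice of the per-VM state, and fake_hardware stands in for the real adapter so the sketch can run anywhere. The real thing also has to deal with index/data register pairs, reads, memory and so on.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define VGA_PORT_BASE  0x3C0u
#define VGA_PORT_COUNT 0x20u   /* ports 3C0h..3DFh */

/* Hypothetical per-VM state: one shadow byte per VGA I/O port. */
struct vm_video_state {
    uint8_t shadow[VGA_PORT_COUNT];
};

/* Stand-in for the real adapter's registers. */
static uint8_t fake_hardware[VGA_PORT_COUNT];

/* Route an OUT to a VGA port: the focused VM reaches the "hardware",
 * while a background VM only updates its own simulated copy. */
void vga_port_write(struct vm_video_state *vm, int has_focus,
                    uint16_t port, uint8_t value)
{
    assert(vm != NULL);
    assert(port >= VGA_PORT_BASE && port < VGA_PORT_BASE + VGA_PORT_COUNT);

    unsigned idx = port - VGA_PORT_BASE;
    if (has_focus)
        fake_hardware[idx] = value;
    else
        vm->shadow[idx] = value;
}

/* Read back what the "hardware" currently holds for a port. */
uint8_t hardware_port_value(uint16_t port)
{
    assert(port >= VGA_PORT_BASE && port < VGA_PORT_BASE + VGA_PORT_COUNT);
    return fake_hardware[port - VGA_PORT_BASE];
}
```

In this model, a write to 3C4h from a backgrounded DOS VM never reaches the adapter. If the real VDD ever lets such a write leak through, or restores the wrong state on a focus switch, you'd expect exactly the sort of corruption I'm seeing.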

Last but not least: the real-mode initialisation code found in VDD_Real_init has logic to detect various different VGA adapters, and it enables specific flags for them which get stored in the VDD_TFlags bitfield. These flags, in turn, influence other parts of the VDD's behaviour.

Many of these changes are just to teach the VDD to save, restore and simulate specific registers that only exist on certain adapters.

At this point I was feeling a little unsure about this project. If the VDD's state tracking requires this much micromanagement of the VGA adapter and its vendor-specific quirks, then I might just be fighting a battle that I can't win.

But I figured I could keep going and see if I can learn some more.

What's the deal with DspDrvr_Addresses?

There's a number of APIs provided by the VDD to the grabber. In 386/INCLUDE/VMDAVGA.INC we see these interesting definitions for new ones:

; New API's for 3.1 display drivers

Private_DspDrvr_1 EQU 0Ah

.errnz Private_DspDrvr_1 - GRB_Unlock_APP - 1

DspDrvr_Version EQU 0Bh

DspDrvr_Addresses EQU 0Ch

And if we look inside 386/VDDVGA/VDDSVC.ASM, we see how the VGA VDD handles these:

IFDEF DspDrvrRing0Hack

cmp cl,Private_DspDrvr_1

je VDD_Init_DspDrv_Ring0

ENDIF

cmp cl, DspDrvr_Version

je VDD_SVC_Dsp_Version

cmp cl, DspDrvr_Addresses

je VDD_SVC_Set_Addresses

Suspicious. The first one seems to only be used by the IBM 8514 display driver, for a specific implementation of BitBlt. The second one just returns a version number. The third one is what we really care about.

Here's the comments from the VDDVGA implementation:

```asm
;******************************************************************************
;
;   VDD_SVC_Set_Addresses
;
;   DESCRIPTION: This service is called by the display driver BEFORE it
;                does the INT 2Fh to indicate that it knows how to restore
;                its screen. This service is used to tell us where we
;                can interface with the display driver.
;
;                One of the addresses passed is the location of a byte of
;                video memory that we can safely use to save/restore latches.
;
;                The 2nd address passed is the location of the flag byte
;                used by the display driver to determine the availability
;                and validity of the "save screen bits" area. The display
;                driver copies portions of the visible screen to non-visible
;                memory to save original contents when dialog boxes or menus
;                area displayed. Since this is non-visible memory, it is
;                subject to demand paging and be stolen for use by a
;                different VM. If a page is stolen from the sys VM, then
;                any data that was in the page is lost, because we don't
;                maintain a copy, so we need to indicate to the display
;                driver that the "save screen bits" area is now invalid.
;
;
;   ENTRY:       EBX = VM Handle
;                EBP = Client stack frame ptr
;                Client_AX = function #
;                Client_BX = offset of address in display segment
;                Client_DX is reserved and must be 0
;                ClientDS:Client_SI -> shadow_mem_status
;
;   EXIT:        Client_AX is returned with a copy of Client_BX to
;                indicate that the service is implemented
;
;   USES:        EAX, ECX, Flags
;
;==============================================================================
```

Here's my best pseudo-C translation of the code and its comments, because I don't want to inflict more x86 assembly on anyone than is absolutely necessary. You deserve better.

```c
extern void VDD_PH_Mem_Set_Sys_Latch_Addr(u32 eax, u8 cl, u8 ch, u32 edx);

void VDD_SVC_Set_Addresses(struct ClientStackFrame *s) {
    struct VDD_CB_Struc *cb = SetVDDPtr();
    u32 latchAddr;                  // eax
    u8 latchBank;                   // cl
    u8 firstVisiblePageInFirstBank; // ch
    u32 visiblePagesInFirstBank;    // edx

    latchAddr = s->Client_BX;
    s->Client_AX = latchAddr & 0xFFFF;

    if (s->Client_DX
#ifdef TLVGA
        && (cb->VDD_TFlags & fVT_TL_ET4000)
#endif
    )
    {
        VT_Flags &= ~fVT_SysVMin2ndBank;
        cb->VDD_TFlags &= ~fVT_SysVMin2ndBank;
        visiblePagesInFirstBank = 0;
        firstVisiblePageInFirstBank = 0;
        latchBank = s->Client_CL;
        // Q: display driver put latch byte in different bank?
        if (latchBank == 0) {
            // N: we have to reserve a pg in the 1st bank
            visiblePagesInFirstBank++;
            // CH page # to reserve
            firstVisiblePageInFirstBank = (latchAddr >> 8) & 0xFF;
            firstVisiblePageInFirstBank >>= 4;
        } else {
            // Y: so no pages are required in 1st bank
        }
    } else {
        firstVisiblePageInFirstBank = 0;
        latchBank = 0;
        // this assumes latch page is last visible page
        visiblePagesInFirstBank = (latchAddr >> 12) + 1;
    }

    cb->VDD_Flags |= fVDD_DspDrvrAware;
    VDD_PH_Mem_Set_Sys_Latch_Addr(latchAddr, latchBank, firstVisiblePageInFirstBank, visiblePagesInFirstBank);
    Vid_Shadow_Mem_Status_Ptr = (s->Client_DS << 16) | s->Client_SI;
}
```

So there's some weirdness going on here. The comment says that DX is reserved and must be 0. Yet, if DX is a non-zero value, this triggers special behaviour that reads from CL (which isn't even documented as being used)!

And this isn't used by any of the drivers in the DDK. Weird.

What about the SVGA version? Sadly I don't have comments for that, but I can at least disassemble the code and do a similar translation.

```c
extern void VDD_PH_Mem_Set_Sys_Latch_Addr(u32 eax, u8 cl, u8 ch, u32 edx);

void VDD_SVC_Set_Addresses(struct ClientStackFrame *s) {
    struct VDD_CB_Struc *cb = SetVDDPtr();
    u32 latchAddr;                  // eax
    u8 latchBank;                   // cl
    u8 firstVisiblePageInFirstBank; // ch
    u32 visiblePagesInFirstBank;    // edx

    latchAddr = s->Client_BX;
    s->Client_AX = latchAddr & 0xFFFF;

    if (s->Client_DX && s->Client_DX == 2) {
        VT_Flags &= ~(fVT_SysVMnot1stBank | fVT_SysVMin2ndBank);
        cb->VDD_TFlags &= ~(fVT_SysVMnot1stBank | fVT_SysVMin2ndBank);
        VT_Flags |= fVT_0x400;
        cb->VDD_TFlags |= fVT_0x400;
        FUN_00013f60(&VDD_VM_Mem_Msg_Page_Handler);
        latchBank = 0;
        firstVisiblePageInFirstBank = 0;
        visiblePagesInFirstBank = 16;
    } else if (s->Client_DX && (cb->VDD_TFlags & fVT_TL_ET4000)) {
        VT_Flags &= ~fVT_SysVMin2ndBank;
        cb->VDD_TFlags &= ~fVT_SysVMin2ndBank;
        visiblePagesInFirstBank = 0;
        firstVisiblePageInFirstBank = 0;
        latchBank = s->Client_CL;
        // Q: display driver put latch byte in different bank?
        if (latchBank == 0) {
            // N: we have to reserve a pg in the 1st bank
            visiblePagesInFirstBank++;
            // CH page # to reserve
            firstVisiblePageInFirstBank = (latchAddr >> 8) & 0xFF;
            firstVisiblePageInFirstBank >>= 4;
        } else {
            // Y: so no pages are required in 1st bank
        }
    } else {
        firstVisiblePageInFirstBank = 0;
        latchBank = 0;
        // this assumes latch page is last visible page
        visiblePagesInFirstBank = (latchAddr >> 12) + 1;
    }

    cb->VDD_Flags |= fVDD_DspDrvrAware;
    VDD_PH_Mem_Set_Sys_Latch_Addr(latchAddr, latchBank, firstVisiblePageInFirstBank, visiblePagesInFirstBank);
    Vid_Shadow_Mem_Status_Ptr = (s->Client_DS << 16) | s->Client_SI;
}
```

Broadly the same, but there's a brand new case for when the reserved field is set to 2. Also, the latchBank value is no longer used; the underlying logic in that file has changed quite a bit in ways I don't understand.

If I look at the code in the SVGA256 driver, it calls this function with:

- BX (offset of address in display segment) is 0xFFFF
- DX (reserved field) is 2, for the new behaviour
- DS:SI (shadow mem status) is a pointer to a byte, which is not used by SVGA256 anywhere else

Well, that's good to know.
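Plugging that BX value into the page arithmetic from the pseudo-C is a quick sanity check. This sketch assumes 4 KiB pages (which is what the shift by 12 implies), and the helper names are invented for the example rather than taken from the VDD.

```c
#include <stdint.h>

/* Fallback path: pages 0..N count as visible, where page N holds the latch byte. */
uint32_t visible_pages_fallback(uint16_t latch_addr) {
    return ((uint32_t)latch_addr >> 12) + 1;
}

/* ET4000 path: ((addr >> 8) & 0xFF) >> 4 boils down to the 4 KiB page index. */
uint8_t latch_page_index(uint16_t latch_addr) {
    uint8_t high_byte = (uint8_t)((latch_addr >> 8) & 0xFF);
    return (uint8_t)(high_byte >> 4);
}
```

With BX = 0xFFFF, `visible_pages_fallback` gives 16, i.e. the whole 64 KiB window, which is the same count that the DX == 2 branch hard-codes.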

Where do I go from here?

I hoped that analysing both the driver and the VDD would lead me to something obvious that I could just patch, but this hasn't really happened. The VGA hardware may be older than I am, but that doesn't make it simple to understand :(

So I may have to get my paws dirty and do some debugging. Coming back to the OS/2 Museum blog post again, the author writes:

First I tried to find out what was even happening. Comparing bad/good VGA register state, I soon enough discovered that the sequencer registers contents changed, switching from chained to planar mode. This would not matter if the driver used the linear framebuffer to access video memory, but for good reasons it uses banking and accesses video memory through the A0000h aperture.

Can I use the same techniques? I don't really know how to debug things like this, but these issues are fully reproducible in DOSBox, so let's give it a shot.

Examining the Issues in DOSBox

I'm using DOSBox-X on my ARM MacBook. The version shipped by Homebrew doesn't seem to have debugging functionality, so I grabbed new binaries from their website, and now I get a helpful Debug menu.

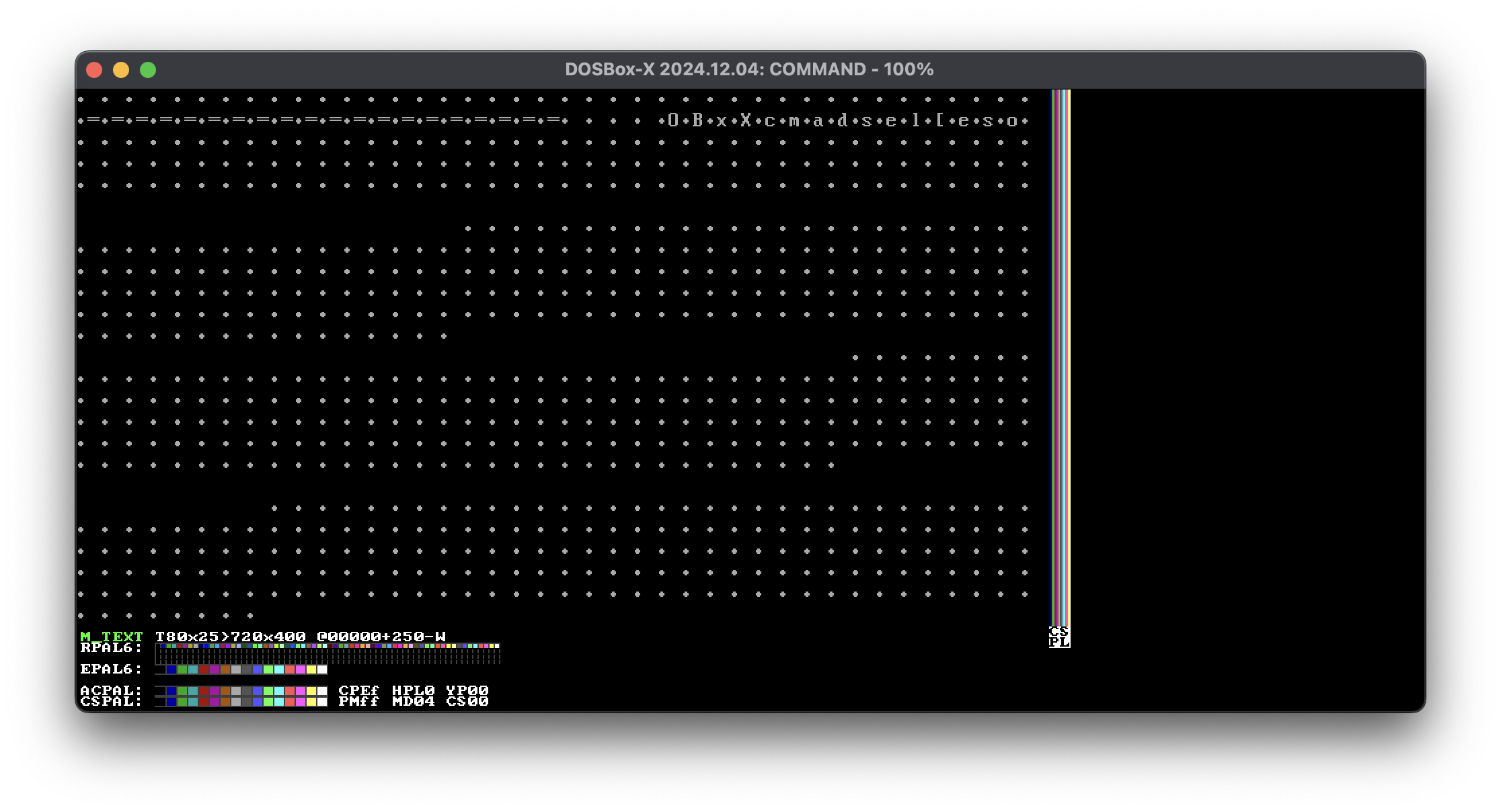

There is a Video debug overlay option which adds some cool visualisations of the VGA adapter's state, along with a bit of info.

If I open a full-screen prompt, press Alt+Enter to return to the (broken) GUI, and then press Alt+Enter once more to go full-screen again, I get this mess. Absolutely cooked.

Comparing the silly little annotations, I see the following state changes:

| State | Silly little annotations |
|---|---|
| Functional GUI | M_LIN8 G800x600>800x600 @00000+100+Dch4 |
| Functional DOS | M_TEXT T80x25>720x400 @00000+050-W |
| Broken GUI | M_VGA G400x600>400x600 @00000+200-Dch4 |
| Broken DOS | M_TEXT T80x25>720x400 @00000+250-W |

What does this mean?? Not a clue.

I can definitely see that the state is going haywire, but I don't know who's responsible. Is there something missing in the patched display driver? Is the VDD corrupting the state? Both?

I had one hunch. I'd seen that the VDD has a lot of special behaviours for different cards; is it possible that one of these is unwittingly being activated and playing havoc? Say that the VDD mistakenly thinks I'm using a... oh, I don't know, "Trident" GPU, and tries to write to a Trident-specific register, but that register address does something else entirely in whatever DOSBox is currently emulating.

This could be an issue... but to know for sure, I'd need to figure out how to see what the value of VT_Flags is.

Viewing Driver Memory

The DOSBox Debugger has commands that let me view memory, but I had a problem - I didn't know where to look! I have no clue how Windows 3.11 maps memory for drivers, or how to even find that out.

My first idea was to just dump the entire system's memory and do a search for the "VDD " string which is always present in the VDD's memory. This would've worked, except for the fact that DOSBox-X's MEMDUMP command only works with logical addresses.

So I wound up with the slightly dirty solution of just recompiling DOSBox-X with that command tweaked. Sure enough, I dumped the first 32MB of physical addresses to a file, and I was able to find the driver's flags.
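The search itself is nothing fancy. Here's a rough sketch of scanning a raw dump for the marker; `find_marker` is a hypothetical helper, and it assumes the dump has already been read into a buffer.

```c
#include <stddef.h>
#include <string.h>

/* Returns the offset of the first occurrence of marker in buf, or -1 if absent. */
long find_marker(const unsigned char *buf, size_t len,
                 const char *marker, size_t marker_len) {
    if (marker_len == 0 || marker_len > len)
        return -1;
    for (size_t i = 0; i + marker_len <= len; i++) {
        if (memcmp(buf + i, marker, marker_len) == 0)
            return (long)i;
    }
    return -1;
}
```

Calling it as `find_marker(dump, dump_len, "VDD ", 4)` returns a physical offset that can then be inspected in the debugger.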

But this turned out to be a red herring. None of the vendor-specific flags are set; at least not in DOSBox. Oh well.

Analysing VGA Registers

I'll need to go a bit deeper. As it turns out, there's a whole set of commands I can use in the DOSBox debugger to print out fine details about the VGA state... basically every register.

I recreated the previous scenario and did this. If I compare all the registers between the 'good' and 'bad' full-screen DOS prompts, one thing sticks out: the field that DOSBox internally calls scan_len. This is used to calculate how many bytes to advance between each line on the screen. In the 'good' state it's 40, but in the 'bad' state it's 296.

What actually causes this, though??

This field can be updated in a few ways:

- Writes to the VGA CRTC Offset Register

- Writes to the S3 "CR43 Extended Mode" Register

- Writes to the S3 "Extended System Control 2" Register

- Calls to the VESA Scan Line API (the one used by SVGAPatch's changes!)

It gets even stranger if I begin with a windowed DOS prompt and look at the scan_len value. On a 'good' GUI, it's 128, but on a 'bad' GUI (after returning to windowed mode), it's 256.

DOSBox's implementation of the VESA API calculates scan_len differently based on what it thinks the current video mode is. This is pretty suspicious.
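To see why a wrong line length wrecks the picture, here's a deliberately simplified model: a linear 8-bit framebuffer where each row starts `pitch` bytes after the previous one. This isn't DOSBox's code, and `pixel_offset` is a name made up for the sketch.

```c
#include <stdint.h>

/* Byte offset of pixel (x, y) when consecutive rows are pitch bytes apart. */
uint32_t pixel_offset(uint32_t pitch, uint32_t x, uint32_t y) {
    return y * pitch + x;
}
```

If the driver draws with a pitch of 1024, row 1 starts at `pixel_offset(1024, 0, 1)`, which is byte 1024. If the adapter scans out with a pitch of 512 instead, it fetches row 1 from byte 512, and every later row drifts further from where the driver put it.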

Since I'd already gone ahead and recompiled DOSBox myself, I figured I'd go further and just throw in some logging.

```cpp
LOG_MSG("VESA_ScanLineLength(subcall=%d, val=%d, bytes=%d, pixels=%d, lines=%d)\n", subcall, val, bytes, pixels, lines);
LOG_MSG(" Current Mode: %s\n", mode_texts[CurMode->type]);
```

And this gives me a smoking gun...!

```
VESA_ScanLineLength(subcall=2, val=1024, bytes=2, pixels=1024, lines=4768)
 Current Mode: M_TEXT
```

The display driver is calling VBE operation 4F06h to set the scan line length to 1024 bytes, but DOSBox thinks we're in text mode, so the resulting field is wrong.

The obvious next step is to add logging for video mode changes. This is a little more annoying than it seems, because there's a few ways this can occur. To be thorough, I decide to add log messages to all of them.

This finally lets me assemble a crude timeline of events.

- Start Windows
  - M_TEXT -> Mode 12h (640x480 VGA)
  - M_EGA -> Mode 10h (640x350 EGA)
  - M_EGA -> Mode 3 (80x25 text)
  - M_TEXT -> Mode 103h (800x600 SVGA)
  - ScanLineLength called: 1024 bytes, with the current mode being M_LIN8
  - So far so good - we have a working desktop now
- I open a full-screen DOS prompt
  - M_TEXT -> Mode 3 (80x25 text)
  - So far so good - we have a working prompt, no anomalies
- I press Alt+Enter to return to windowed mode
  - M_TEXT -> Mode 30h (... what?!)
  - ScanLineLength called: 1024 bytes, with the current mode being M_TEXT
  - Now the debugger says we're in mode M_VGA?!
  - scan_len is now corrupted, along with our display

There's three things going on here that we need to unpack:

- Something is asking for a mode switch to 30h (or decimal 48), which doesn't work as that is not a supported mode.

- The patched display driver tries to set the scanline length, but this corrupts the state because we're in text mode

- Yet we somehow end up in a graphics mode, without a switch?? What???

DOSBox has a global variable called CurMode which is updated every time the video mode changes through a call to INT 10h - either with the legacy video mode call from the IBM PC days, or the slightly more modern VESA/VBE API.

On the other hand, the debugger's VGA MODE command (which is what I'm using to introspect the state) is reading from DOSBox's vga.mode, which is a more low-level field in the display engine. This is computed from VGA registers.

So... I think this is the fault of the VDD! It's doing its thing and dutifully saving/restoring registers behind the scenes, but this leaves us out of sync when we try to use the higher-level VBE interface.
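Here's a toy model of that desync. It assumes two views of the video mode: one cached by the INT 10h path, and one derived from the registers. All of the names are invented for the sketch, and the real DOSBox logic is far more involved.

```c
/* Hypothetical mode identifiers for the sketch. */
enum mode { MODE_TEXT, MODE_GRAPHICS };

struct video_state {
    enum mode cached_mode; /* updated only by the INT 10h path (like CurMode) */
    int graphics_bit;      /* stand-in for the raw register state behind vga.mode */
};

/* BIOS-style mode set: programs the registers and updates the cache. */
void bios_set_mode(struct video_state *s, enum mode m) {
    s->cached_mode = m;
    s->graphics_bit = (m == MODE_GRAPHICS);
}

/* VDD-style restore: writes registers directly, so the cache never hears about it. */
void restore_registers(struct video_state *s, int saved_graphics_bit) {
    s->graphics_bit = saved_graphics_bit;
}

/* The mode the hardware is really in, derived from the registers. */
enum mode hardware_mode(const struct video_state *s) {
    return s->graphics_bit ? MODE_GRAPHICS : MODE_TEXT;
}
```

After a `bios_set_mode` to text followed by a `restore_registers` that flips the hardware back to graphics, `cached_mode` still says text. Anything that consults the cache, as the VBE scan line call appears to, then computes its result for the wrong mode.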

What about point 1? Who is trying to set the video mode to the invalid 30h, and why?

Well, this comes back to where we began. SVGAPatch hijacks the entries used by the Microsoft driver for the Tseng ET4000 chipset. For a resolution of 800x600, it uses the Tseng-specific mode 30h.

Lo and behold, if we look for calls to INT 10h in our patched display driver, we find a function that calls it.

So the VBE patch really is incomplete after all... 🤔

Fixing the Patch

I didn't want to just replace this function, I also wanted to understand how it fits into the whole system. So it's time to analyse how Windows 3.1 tells the driver about screen switches! Thankfully, this code is all present in the DDK's VGA driver, so that helps a lot.

Screen Switching

When the display driver is enabled, the hook_int_2F routine (from SSWITCH.ASM) is called. This gets pointers to a couple of functions from Windows DLLs, and also hooks the handler for INT 2Fh.

Remember, 2Fh is the 'Multiplex' interrupt, which lots of software can piggyback on. If a program wants to hook it, it has to store the address of the previous handler, and then call it for any requests that it didn't handle. This effectively creates a chain of hooks, eventually ending at whatever default was assigned by MS-DOS on boot.

This driver is no exception. The hook it uses is called screen_switch_hook.
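The chaining pattern is easier to see in C than in assembly. This is a loose sketch with function pointers standing in for interrupt vectors; the two function codes are the screen-switch ones from the DDK, but every name in it is hypothetical and the real hook's return convention may differ.

```c
#include <stdint.h>

#define SCREEN_SWITCH_OUT 0x4001u
#define SCREEN_SWITCH_IN  0x4002u

/* A handler returns 1 if it handled the request, 0 otherwise. */
typedef int (*int2f_handler)(uint16_t ax);

static int2f_handler previous_handler; /* whoever owned the vector before us */
static int last_switch;                /* records what our hook saw, for demonstration */

/* Stand-in for the default handler at the end of the chain. */
int default_handler(uint16_t ax) {
    (void)ax;
    return 0;
}

/* Our hook: handle the two screen-switch codes, pass everything else down the chain. */
int screen_switch_hook_sketch(uint16_t ax) {
    if (ax == SCREEN_SWITCH_OUT || ax == SCREEN_SWITCH_IN) {
        last_switch = ax;
        return 1;
    }
    return previous_handler ? previous_handler(ax) : 0;
}

/* Installing the hook means remembering the old vector and returning the new one. */
int2f_handler install_hook(int2f_handler current) {
    previous_handler = current;
    return screen_switch_hook_sketch;
}
```

Each program that hooks the interrupt repeats this dance, so a request nobody recognises falls all the way through to the default handler.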

The VGA driver is able to receive four commands via INT 2Fh, which are crudely documented as follows in the DDK:

```asm
SCREEN_SWITCH_OUT equ 4001h ;Moving 3xBox to background
SCREEN_SWITCH_IN  equ 4002h ;Moving 3xBox to foreground
SAVE_DEV_REGS     equ 4005h ;int 2f code to save registers
RES_DEV_REGS      equ 4006h ;int 2f code to restore registers
```

...but interestingly, only the two SCREEN_SWITCH_x commands are supported by the SVGA256 driver.

Anyway, both of these call three routines in order. For the OUT command, it's pre_switch_to_background, dev_to_background, and then post_switch_to_background. For the IN command, it's the same, but with _to_foreground routines instead.

All of these are pretty basic, except for dev_to_foreground, which is the one called when you switch back to Windows itself.

In the VGA driver's code (286/DISPLAY/4PLANE/3XSWITCH.ASM), dev_to_foreground is pretty simple - it calls INT 10h with ax=1002h to reset all the palette registers, then calls init_hw_regs to... set some stuff that I don't really get.

In the Video 7 driver's code from the DDK (286/DISPLAY/8PLANE/V7VGA/SRC/3XSWITCH.ASM), it's a bit spicier:

- Calls farsetmode, which:
  - Sets the display mode
  - Enables Video 7 VGA extensions
  - Sets the line length
- Calls far_set_dacsize to set the DAC mode, whatever that means
- Calls setramdac to program the palette
- Calls its own version of init_hw_regs
- Sets enabled_flag to 0xFF
- Calls far_set_cursor_addr

So after looking at these, what do we see in the problematic SVGA256 driver?

- Calls farsetmode, which:
  - Sets the display mode to 48 (!!)
  - Calls the chipset-specific VideoInit routine
- Sets enabled_flag to 0xFF
- Calls the Windows API's SetPalette to set the palette

Herein lies our problem! There are two places where the video mode gets set, and SVGAPatch missed this one. It does call the VideoInit routine, so it uses the patched code to set the scanline length, but without being in the right video mode, it won't actually give us the right result.

Can we fix it?

Hopefully!

The original code just looks like this:

```asm
mov ax, [wGraphicsMode]
int 10h
call [ptr_videoinit]
retn
```

There's not much room to work with, but luckily, this is right after SetAndValidateMode, a function that was replaced by SVGAPatch with a much shorter version. So, I take the first byte right after the end of the new SetAndValidateMode, and inject some new assembly:

```asm
mov cx, [CurrentHeight]
dec cx
call SetAndValidateMode
call ptr_videoinit
retn
```

Since SetAndValidateMode expects cx to contain the screen height (minus 1), I do that. Then I replace the first instruction of setmode with a simple jmp to my new code.

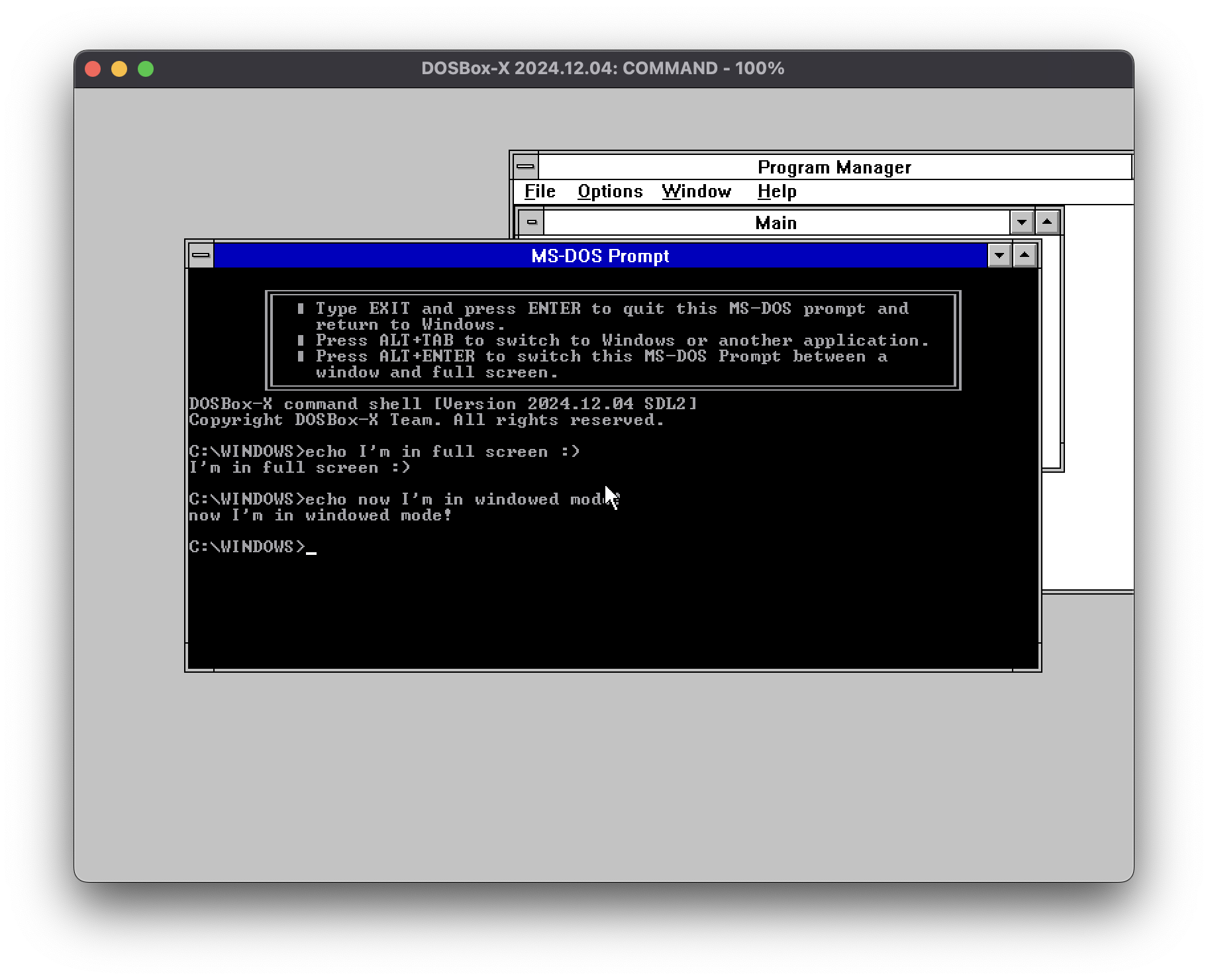

Lo and behold... the GUI no longer gets trashed after entering a full-screen DOS session!

One issue remains, though. If I go from windowed mode to full screen, the dots come back.

Register Debugging (Again)

I've tried to use my previous trick where I invoke all the DOSBox VGA debug commands to dump the state and I compare them, but this time I'm not getting anything useful.

Why am I getting nonsense? If I enter DP B8000 into the DOSBox debugger console to look at the VGA memory, I see that the text is all there - it's just not showing up!

There is an interesting DOSBox debug feature that lets you enter a command like VGA DS START 2 to override the VGA base address, and if I do this, it shifts the garbage around, but it's still the same garbage.

So then I thought... I know that the driver uses banking, so that it can access more video memory than is available with ye olde VGA. When it needs to read or write something outwith the range it currently sees, it'll call the SetBank function (which was patched by SVGAPatch) to ask for that window to be moved. Is this the problem?
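For anyone who hasn't met banking before, here's the basic arithmetic. It assumes a 64 KiB window at A0000h and banks that are also 64 KiB apart; granularity varies between adapters, so treat that as an assumption. The struct and function names are invented for the sketch.

```c
#include <stdint.h>

#define BANK_SIZE 0x10000u /* assumed: 64 KiB window and 64 KiB granularity */

struct banked_addr {
    uint32_t bank;   /* which window position to ask for */
    uint32_t offset; /* offset inside the A0000h aperture */
};

/* Split a linear video memory address into (bank, offset within window). */
struct banked_addr to_banked(uint32_t linear) {
    struct banked_addr a;
    a.bank = linear / BANK_SIZE;
    a.offset = linear % BANK_SIZE;
    return a;
}
```

At a pitch of 1024 bytes, each bank holds 64 rows, so row 64 is the first one that needs bank 1: `to_banked(64 * 1024)` gives bank 1, offset 0. If the adapter is left on the wrong bank, reads and writes through the window land in the wrong slice of video memory.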

Turns out, the bank configuration is stored in a separate structure that isn't covered by any of the debug commands. I add a quick and dirty command to dump these, and recompile DOSBox yet again.

```cpp
else if (command == "SVGA") {
    DEBUG_ShowMsg("VGA SVGA data: bankMask=%lu read_full=%lu write_full=%lu read=%u write=%u size=%lu",
        (unsigned long)vga.svga.bankMask,
        (unsigned long)vga.svga.bank_read_full,
        (unsigned long)vga.svga.bank_write_full,
        vga.svga.bank_read,
        vga.svga.bank_write,
        (unsigned long)vga.svga.bank_size
    );
}
```

Sure enough, when I return to full screen mode, the VGA adapter is stuck on the wrong bank!

Can we fix it? Again??

I've now gotten to the point in writing up this post where I've written about all the stuff I've done, and I haven't actually attempted to fix this yet. But you know what... screw it, I may as well try.

We've seen dev_to_foreground, which called farsetmode, which jumped to setmode, and fixing that made the GUI work. So, what if I modify dev_to_background to reset the bank to 0 as we're leaving the GUI?

All we've got in that routine right now is a simple mov [enabled_flag], 0. I've still got a bunch of free space over next to my silly little kludge... but it's in the wrong segment. If I want to add a new cross-segment call, I'll need to mess with the executable structure, and I honestly cannot be bothered.

So I spent a while trying to find something in the first segment that I could hack to shreds. Eventually I settled on the implementation of GetDriverResourceID, which is a function that's only used for the 120dpi ("Large Fonts") configuration.

First I need to bring it down to its simplest possible form:

```asm
push bp
mov bp, sp
mov ax, [bp+0Ah]
pop bp
retf 6
```

This now leaves me a bit of space to write a new bank resetting routine, which should force the video adapter back to bank 0:

```asm
mov [ds:enabled_flag], 0
xor dx, dx
call set_bank_select
retn
```

And finally, I jump to it from dev_to_background, so that it'll be called when I leave the GUI.

So with great trepidation, I try to run it, and... the OS doesn't crash! But it doesn't fix the issue, either :(

After a bunch of painstaking printf debugging I find out why... DOSBox implements VBE operation 4F05h (to set the 'bank') by writing to the VGA CRTC register 0x6A. I'm doing it from dev_to_background, but that routine is called too late - by that point, we've already switched away, and the VDD is trapping our writes.

This doesn't align with what I see in the Windows DDK's documentation:

Interrupt 2Fh Function 4001h

```asm
mov ax, 4001h ; Notify Background Switch
int 2fh
```

Notify Background Switch notifies a VM application that it is being switched to the background. The VM application can carry out any actions, but should do so within 1000ms. This is the amount of time the system waits before switching the application.

I have two options:

- I somehow find a way to trigger this earlier in the switching process
  - Would be nice, but I clearly can't believe the docs, and I don't really want to try and disassemble more of Windows.
- I teach the VDD to let me pass these writes through, as Microsoft did for all the SVGA adapters they officially supported
  - This would fix it for DOSBox, but that fix would be tied to whichever video adapter it's emulating. I don't want that, I'm trying to make the generic VBE patch work better!
  - It's unlikely that my Eee PC's Intel GMA950 also uses CRTC register 0x6A for banks.

At this point, you know what... I think I'm done. It's almost midnight and I have to return to my day job tomorrow. (The Java™ cannot wait.)

... What now?

This was a fun little endeavour, and I've gotten surprisingly far, but I don't want to invest much more time into it - it's just something I wanted to poke at over the holiday season.

You know how brittle this whole setup is? Just for fun, let's go back to the original SVGA 256-colour driver from Microsoft, and its list of supported cards... and let's use 86Box to try out a few.

| Emulated Card | How it worked at 1024x768, 256 colours |
|---|---|
| ATI VGA Wonder XL | Failed to start Windows (ok, this might just be too new a variant) |
| Cirrus Logic GD5420 (ISA) | Works! ✅ |
| Oak OTI-077 | Screen corruption when initially opening a windowed DOS prompt, vertical lines when full-screening one |
| Paradise PVGA1A | Failed to start Windows |
| Trident TVGA 8900D | Screen corruption when full-screening a DOS prompt, but windowed is ok |
| Tseng Labs ET4000AX | Works! ✅ |
| Video 7 VGA 1024i (HT208) | Only works at 640x480, fails to start at any higher resolution |

I should caveat this with the fact that 86Box doesn't have some of the exact same cards, so that might lead to issues, and I don't know how accurate the emulation is for all of them. Still, this suggests that even the original support was already kind of dodgy - so I won't be too sad about not being able to make it perfect myself.

I also picked some of the newer cards in 86Box and tried them with my patched version of the patched driver, to see what would happen --

| Emulated Card | How it worked at 1024x768, 256 colours |
|---|---|
| Matrox Millennium II | Unbearably laggy. Windowed DOS prompts work, full-screen is broken. |
| 3dfx Voodoo Banshee | Opening a windowed DOS prompt corrupts the GUI, but switching to and from full-screen works perfectly. |
| S3 Trio3D/2X (362) | Beautiful glitch art when Windows first launches (as seen above!), but if you open a DOS prompt and then switch out of full-screen, you get perfect 1024x768. Oh, and full-screen is broken. |
| 3dfx Voodoo3 3500 SI | Same as the Banshee, but full-screen only works once and then it just breaks on any subsequent Alt+Enters. |

Last but not least... I'm sure you're dying to know, how far did I get with the Eee PC after all this faffery? The GUI works fine. Switching to a full-screen DOS prompt breaks, but in an interestingly different way to DOSBox. While on DOSBox I get text mode with lots of corrupted characters, on the Eee PC I get a broken version of the GUI where some of the colours have disappeared.

However, the saving grace is that you can just switch to windowed mode again and it recovers OK.

If you think that looks cool, then here's a fun one I got when I was messing around with the text mode blue screen that you get when you press Ctrl+Alt+Del - somehow I got it to corrupt the VM's video memory, and the results stuck around enough to get rendered at glorious 1024x600 by the Grabber.

And you know what - glitch art aside... my updated driver may not be perfect, but it's already a huge improvement over the original SVGAPatch, where simply opening a prompt (even in a window) would break the entire GUI and require me to restart the OS. I'll take that W.

For anything better than that, I'll continue to keep an eye on PluMGMK/vbesvga.drv, which is being actively developed and written by someone that actually knows what they're doing when it comes to 16-bit PC dev and video hardware.

I hope you enjoyed this adventure :3 I had fun, but I'm definitely ready to move on to something else now. Maybe I'll even write the post I originally planned on writing, about how to set up Windows 3.x and 9x on the Eee PC. Maybe.

If you liked it, you can subscribe to this blog for more infrequent tech nonsense, or follow me on your favourite microblogging platform (though I don't actually toot/skeet/tweet about tech all that much).
